Figuring out which deployment strategy to choose requires some real consideration. It can sometimes feel like you’re rolling the dice, trading off how much downtime you can tolerate against how quickly you can roll back when something goes wrong.

Whether you’re launching a new version of your application or debuting brand-new features in your production environment, this quick guide will help you weigh up how well each process fits your needs and understand which rollout method is likely to work best for your use case.

Deploying without downtime

In today’s cloud-native landscape, avoiding downtime has become synonymous with protecting your bottom line. This is particularly true in enterprise environments, where the goal is minimal to zero impact on end users who may be carrying out critical tasks during peak hours.

However, when you’re maintaining a globally available digital service, peak hours can occur around the clock, making it hard to schedule disruption-free maintenance windows. A quiet time to ship a fix in one region will likely clash with peak demand in another. Gone are the days when DevOps teams could stage a release over a maintenance window.

In these scenarios, we must design our deployment strategies around constant uptime, which means getting creative in how we manage the other variables. There are several ways to do this; two of the most common are blue-green deployments and canary deployments. Both have their advantages and drawbacks, so let’s look at what each strategy can deliver.

What is blue-green deployment?

A blue-green deployment strategy is a release model that hinges on operating an either/or state between two parallel instances of your application or service to deliver zero-downtime deployments. Blue-green requires you to host and maintain two separate, near-identical production environments of your application or service at any given time.

Let’s take the example of one service running behind a load balancer and reading from a database, and call this our blue environment. When it comes to making changes to our application or service, rather than staging these changes in our blue instance, we can instead stand up a completely separate service, which we’ll refer to as our green environment. We can do all of this while our blue environment stays online, serving the existing version of our application to the same number of users we’re used to during peak hours.

Once the new version of our application in the green environment is ready to be served to end users, we can switch traffic over via our load balancer to serve the new release to our wider customer base.
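To make the either/or switch concrete, here is a minimal Python sketch of the cutover. It assumes a hypothetical in-memory `BlueGreenRouter` standing in for your load balancer; the class and method names are illustrative, not any vendor’s API. What it shows is that traffic only ever goes to one environment, the cutover is a single pointer change, and switching back is the same operation run again.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """One of the two parallel deployments (a hypothetical, in-memory model)."""
    name: str
    version: str
    healthy: bool = True


@dataclass
class BlueGreenRouter:
    """Stand-in for a load balancer that sends all traffic to exactly one environment."""
    blue: Environment
    green: Environment
    live: str = "blue"  # name of the environment currently receiving traffic

    def active(self) -> Environment:
        return self.blue if self.live == "blue" else self.green

    def idle(self) -> Environment:
        return self.green if self.live == "blue" else self.blue

    def switch(self) -> Environment:
        """Cut traffic over to the idle environment, refusing if it fails its health check.

        Calling switch() again after a cutover is the rollback path.
        """
        target = self.idle()
        if not target.healthy:
            raise RuntimeError(f"{target.name} is unhealthy; keeping {self.live} live")
        self.live = target.name
        return target

    def route(self, request_id: str) -> str:
        # Either/or: every request goes to the single live environment.
        env = self.active()
        return f"{request_id} -> {env.name} ({env.version})"


if __name__ == "__main__":
    router = BlueGreenRouter(Environment("blue", "v1"), Environment("green", "v2"))
    print(router.route("req-1"))  # served by blue (v1)
    router.switch()                # cutover once green is ready
    print(router.route("req-2"))  # served by green (v2)
    router.switch()                # rollback: blue takes traffic again
```

The health check before the switch is a design choice of the sketch: it keeps a broken green environment from ever receiving traffic, so the worst case is simply staying on blue.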

What are the benefits of blue-green deployment?

Blue-green as a strategy predates cloud-native and microservice-based architectures, and that long track record has made it a solid solution for many scenarios and a mainstay within the world of DevOps.

With two environments, you can have one deployment available to your end users while the other receives no live traffic but hosts the newest version of your application with the latest changes. When you’re ready for your changes to reach your audience, and your tests are satisfied, you can update a flag value to switch the DNS records from the instance running the previous version of your application to the new instance.

Running two separate but identical deployments enables you to test new functionality without sacrificing your capacity for easy rollbacks should there be an issue with the new version of the application. In an AWS environment, this can be as straightforward as using Amazon Route 53 DNS with two EC2 instances, each running a version of an API or microservice, and switching between them using feature flags. If an incident does occur, you can revert to your previous state and reroute your traffic to your legacy version at the drop of a hat.
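As a hedged illustration of the Route 53 variant, the sketch below turns a flag value into the `ChangeBatch` payload that boto3’s Route 53 client accepts in `change_resource_record_sets(HostedZoneId=..., ChangeBatch=...)`. The record name, the IP addresses and the idea that the flag holds the string `"blue"` or `"green"` are all assumptions for the example, and the AWS call itself is left out so the code runs without credentials.

```python
import json

# Hypothetical values: replace with your own record name and instance IPs.
RECORD_NAME = "api.example.com."
TARGETS = {"blue": "203.0.113.10", "green": "203.0.113.20"}


def build_change_batch(flag_value: str, ttl: int = 60) -> dict:
    """Build a Route 53 ChangeBatch pointing RECORD_NAME at the flagged environment.

    flag_value is assumed to come from a feature flag holding "blue" or "green".
    """
    if flag_value not in TARGETS:
        raise ValueError(f"unknown environment: {flag_value!r}")
    return {
        "Comment": f"blue-green switch to {flag_value}",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or overwrite it if it exists
                "ResourceRecordSet": {
                    "Name": RECORD_NAME,
                    "Type": "A",
                    "TTL": ttl,  # a short TTL lets resolvers pick up a switch sooner
                    "ResourceRecords": [{"Value": TARGETS[flag_value]}],
                },
            }
        ],
    }


if __name__ == "__main__":
    # The resulting dict is what you would pass as ChangeBatch to boto3.
    print(json.dumps(build_change_batch("green"), indent=2))
```

Rolling back is the same call with the flag flipped back to `"blue"`. One caveat worth keeping in mind: DNS answers are cached for up to the record’s TTL, so some clients may keep reaching the old instance for a while after the switch; a short TTL narrows that window.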

Operating this strategy naturally improves your incident response procedure, as with each release you’re following a protocol similar to the one you’d use to put out a hotfix. In addition to avoiding downtime and simplifying rollback procedures, running two concurrent production environments supports better testing practices, as it gives you an alternate, identical testing ground to work with.

What is canary deployment?

Blue-green has its limits, though: some dependencies require real user-generated traffic to be adequately tested. After all, even the most sophisticated staging environment won’t match the intricacies and nuances you encounter in production.

For situations where different versions of your application require real user traffic, where running parallel, concurrent deployments isn’t an option, or where your new code introduces a variation on a template that you’d like to use to improve the user experience and measure its impact, a canary deployment could be the way to go.

As a deployment process, a canary deployment bears a lot of similarities to blue-green but uses a slightly different method. Canary deployments, often referred to as canary releases, involve rolling out new features and the latest additions to your production environment to a much smaller subset of your users before rolling them out to your entire user base.
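One common way to pick that smaller subset is to hash a stable user identifier into one of 100 buckets and send users below a threshold to the new version. The Python below is a minimal sketch of that idea, not LaunchDarkly’s or any other product’s targeting algorithm; the `salt` value and the version labels are hypothetical.

```python
import hashlib


def bucket(user_id: str, salt: str = "canary-v2") -> int:
    """Map a user ID to a stable bucket in [0, 100) by hashing it.

    The salt is a hypothetical per-release value so different rollouts pick different users.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def choose_version(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of users to the new version; same user, same answer."""
    return "v2-canary" if bucket(user_id) < canary_percent else "v1-stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    in_canary = sum(choose_version(u, 10) == "v2-canary" for u in users)
    print(f"{in_canary} of {len(users)} users see the canary at 10%")
```

Because a user’s bucket never changes, widening the canary from 5% to 10% keeps everyone already in the canary there and only adds new users, so each person’s experience stays consistent between visits.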

What are the benefits of canary deployment?

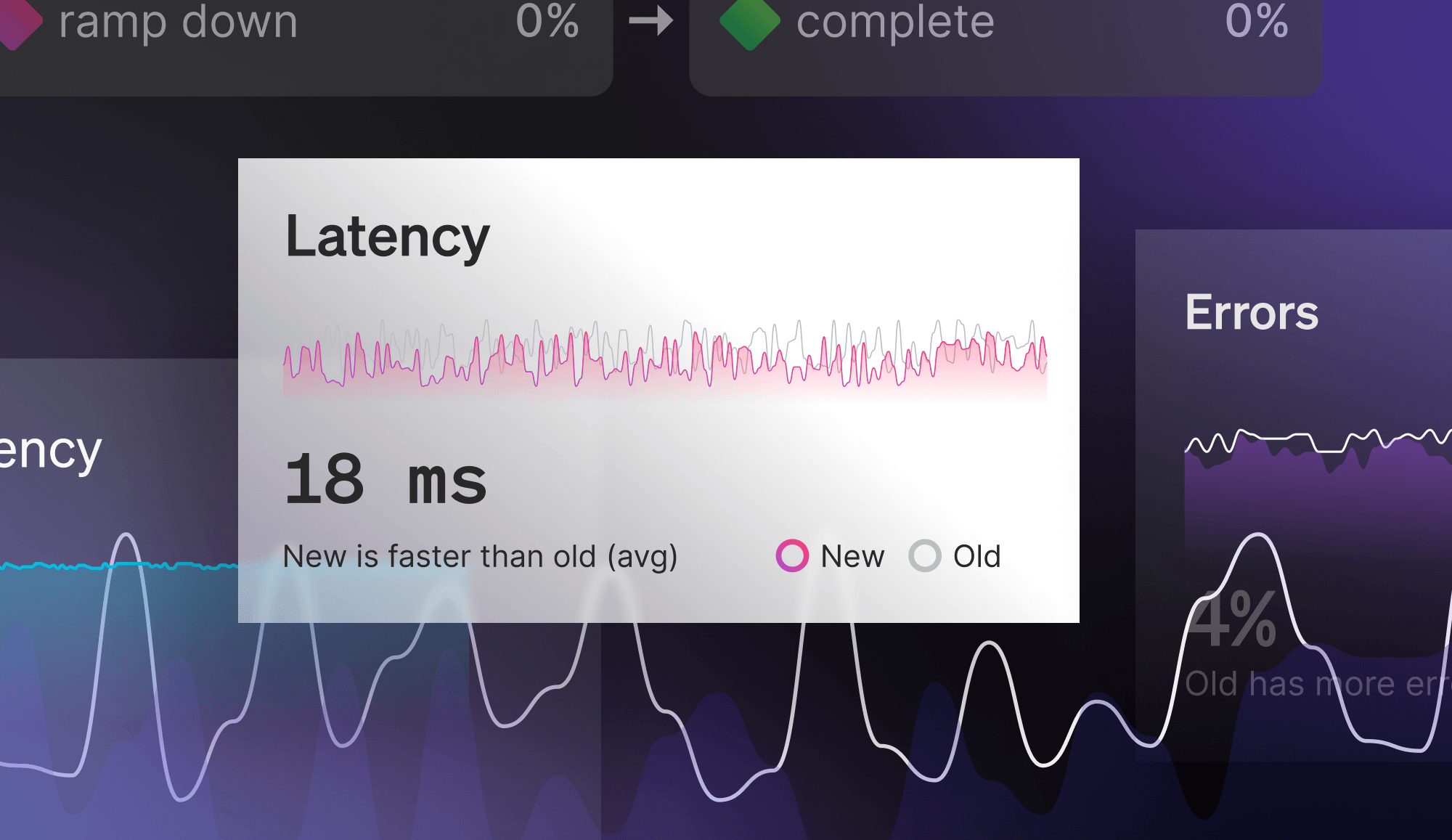

Going out to a subset of users first also lets you stage a rolling deployment, a process in which a release is deployed to each server one by one. For example, if we’re rolling out an update to an application deployed in a Kubernetes cluster, a rolling deployment allows the update to take place with zero downtime by incrementally replacing Pod instances with new ones, with the new Pods scheduled on nodes with available resources. This process supports maximum availability and uptime, letting you catch and address issues before they can impact you at scale.
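The one-by-one replacement described above can be simulated in a few lines. This is a simplified Python sketch, not how Kubernetes implements it (Kubernetes Deployments use a `RollingUpdate` strategy tuned by `maxUnavailable` and `maxSurge`); `deploy` and `health_check` are hypothetical callbacks you would wire to your own tooling.

```python
from typing import Callable, Optional


def rolling_update(
    servers: list[str],
    new_version: str,
    deploy: Callable[[str, str], None],
    health_check: Callable[[str], bool],
) -> tuple[list[str], Optional[str]]:
    """Update servers one at a time, halting at the first failed health check.

    Returns (servers updated and verified healthy, first failing server or None).
    """
    updated: list[str] = []
    for server in servers:
        deploy(server, new_version)  # replace this one server; the rest keep serving
        if not health_check(server):
            return updated, server  # stop here: remaining servers keep the old version
        updated.append(server)
    return updated, None


if __name__ == "__main__":
    fleet = {f"node-{i}": "v1" for i in range(4)}  # hypothetical server -> version map
    done, failed = rolling_update(list(fleet), "v2", fleet.__setitem__, lambda s: True)
    print(done, failed, fleet)
```

The sketch halts rather than rolling back: servers after the failure keep the old version, and deciding what to do with those already updated is left to you.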

Another possibility that canary deployments unlock is A/B testing. If, for example, you’re working with a web app, canary-testing your deployments allows you to launch experimental functionality or revamped layouts to a small audience first and examine how they perform against your predefined set of metrics. If your test audience doesn’t respond well to the features you preview, you can catch that feedback early and implement a fix before the experiment goes live to your entire user base.
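Checking a canary against a predefined set of metrics can be as simple as comparing it with the baseline and applying thresholds. The Python sketch below assumes three hypothetical metrics (requests, errors, conversions) and example threshold values; it is a plain threshold check, not a statistical significance test, which a rigorous A/B experiment would also need.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    """Hypothetical counters collected for one group of users."""
    requests: int
    errors: int
    conversions: int

    def rate(self, count: int) -> float:
        return count / self.requests if self.requests else 0.0


def evaluate_canary(
    baseline: Metrics,
    canary: Metrics,
    max_error_increase: float = 0.01,   # example: allow at most +1 point of error rate
    min_conversion_ratio: float = 0.95,  # example: canary must keep 95% of baseline conversion
    min_requests: int = 500,             # example: minimum traffic before judging
) -> str:
    """Return "wait", "rollback" or "promote" by comparing the canary with the baseline."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to judge
    error_delta = canary.rate(canary.errors) - baseline.rate(baseline.errors)
    if error_delta > max_error_increase:
        return "rollback"
    base_conv = baseline.rate(baseline.conversions)
    if base_conv and canary.rate(canary.conversions) / base_conv < min_conversion_ratio:
        return "rollback"
    return "promote"
```

Returning `"wait"` below a minimum sample size is the main design choice here: it avoids promoting or rolling back on a handful of noisy requests.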

Choosing between canary and blue-green deployment processes

One of the core similarities between blue-green and canary deployment processes is this method of splitting your build: both workflows separate your instances by version to some degree.

Depending on your use case, either approach may meet your needs. However, many people find a hybrid approach the best way to blend the benefits of each method. This ‘best of both worlds’ plan would have us route a small subset of our traffic from our old version to our new instance.

This staged approach executes like a canary deployment, with the advantage of letting teams test the new environment with real users and observe its behaviour, ensuring there aren’t any breaking changes in the new deployment. Rolling out in this manner lets us mitigate widespread issues by limiting their exposure to a small percentage of our total user base, while retaining the option to route back to our previous version at short notice.
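The staged, hybrid rollout can be sketched as a loop that raises the share of traffic going to the new version and drops it back to zero at the first sign of trouble. In this Python sketch, `set_weight` and `is_healthy` are hypothetical hooks for your load balancer or feature flag and your monitoring, and the stage percentages are arbitrary examples.

```python
from typing import Callable, Sequence

# Hypothetical schedule: percentage of traffic sent to the new version at each stage.
DEFAULT_STAGES = (1, 5, 25, 50, 100)


def progressive_rollout(
    set_weight: Callable[[int], None],
    is_healthy: Callable[[int], bool],
    stages: Sequence[int] = DEFAULT_STAGES,
) -> int:
    """Shift traffic to the new version stage by stage.

    set_weight(p) is assumed to send p% of traffic to the new version;
    is_healthy(p) is assumed to check metrics at that stage.
    Returns the final weight: the last stage on success, 0 after a rollback.
    """
    for percent in stages:
        set_weight(percent)
        if not is_healthy(percent):
            set_weight(0)  # route everyone back to the previous version
            return 0
    return stages[-1]


if __name__ == "__main__":
    applied: list[int] = []
    final = progressive_rollout(applied.append, lambda p: p < 50)
    print(applied, final)
```

Small early stages keep the blast radius low while there is the least evidence about the new version; the larger jumps come only after it has handled real traffic.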

However you plan to implement continuous integration and continuous delivery across your software development lifecycle, there are various ways to avoid shipping risk alongside your product changes. Staging your deployment with feature flags gives you fine-grained control and precision when conducting large-scale rollouts.

If you’re interested in learning more about how LaunchDarkly can support your deployments, sign up for a demo.
