On April 23, Dylan Etkin, CEO and Founder of sleuth.io, spoke at our Test in Production Meetup on Twitch.

Dylan talked about what it means to track impact in a production environment, what happens if you don't, and some actions you can take today to move in the right direction.

Watch Dylan's full talk.

"...the longer it takes to discover the cause of a negative impact, the more it will cost to fix, if it gets fixed at all." —Dylan Etkin (@detkin) CEO & Founder, sleuth.io

FULL TRANSCRIPT:

Yoz Grahame:

Hello there. Welcome to Test In Production for April the 23rd of 2020. My name is Yoz Grahame. I'm a LaunchDarkly developer advocate. And our guest today is Dylan Etkin who is the CEO and founder of sleuth.io. Hello Dylan. How are you doing today?

Dylan Etkin:

I'm doing well, thank you. Excited to be here.

Yoz Grahame:

I'm excited you're here. Thank you for joining us all the way over in San Francisco. We are currently in Oakland. It does feel rather further apart than it used to.

Dylan Etkin:

It's a little harder to get between the two right now, but we're safe in our living rooms and connecting via the internet.

Yoz Grahame:

Brilliant. And today you are talking to us about impact in production.

Brilliant. We're getting some breakup on the screen at the moment, I think, but excellent. I'm looking forward to hearing it. We've already got a few people watching, I think. Dylan is going to talk to us about impact in production and his work at sleuth.io. The presentation is going to be about 15 or 20 minutes, I believe, and we'll take questions after that.

Excellent. Please feel free to drop your questions in the chat. We'll be taking them and discussing lots of things related to measuring impact and, as the name of the stream implies, testing in production: how to measure, how to set service level indicators and objectives, things like that. But for a general intro to the topic, Dylan, please take it away.

Dylan Etkin:

Great. Thank you so much. Like you said, my name is Dylan Etkin. I am the CEO and founder of Sleuth. In a past life, I spent a lot of time at Atlassian. I was a developer on JIRA, I ran the Bitbucket team for about five years, and then I ran the Statuspage team for about four years, also at Atlassian. I'm going to try to make eye contact with the camera in this new pandemic reality of presentations. If I forget to do so, it's not because I don't love all of you out there; it's because I'm adapting to this crazy new world. And my cat might jump into our conversation at any time; you never know.

Yoz Grahame:

She's very welcome.

Dylan Etkin:

Yeah, no, she's cute. Don't worry. It'll be good. Today I'm going to talk to you about impact in production, and I'm going to start with a bit of a bold claim: without understanding your changes' impact, you don't know if you are helping or hurting. It helps to define what I mean by impact, because it could mean a lot of different things to a lot of different people. What I call impact is often referred to as an SLI, or service level indicator. These values are frequently stored in observability platforms like New Relic, Datadog, or SignalFx.

Here are some examples of impact. These are usually very specific to the application you're building, and they're often really about the effect on the utility that your application provides. For example, at Statuspage, the percentage of emails arriving in a user's inbox in under five minutes from an incident was really important to us; we measured it and made sure we understood the impact of our changes on it. For something like Amazon, the search relevance of the catalog results that come back has a huge impact on the amount of money they can generate. For LaunchDarkly, it might be the response time for feature flag requests: since those happen in the main line of their customers' applications, that's obviously something they care a lot about. And more generally, the availability and uptime of a service could be something you measure impact on.

It really pays to make the distinction that impact isn't monitoring and alerting, although they often overlap. Monitoring and alerting are mostly about whether the service is unavailable or has breached a threshold that is unacceptable. Impact is really about how your service is doing: is the utility you provide functioning, and have you made it better, made it worse, or left it neutral?

Hopefully all of you out there are working on a modern team. Maybe you're working on a DevOps team, and that means your team is moving fast. You're doing all sorts of cool things. You're probably deploying anywhere between one and 50 times a day. You have a neat CD pipeline set up. You're tracking errors with Rollbar, Sentry, or Honeybadger. You have APM with Datadog or New Relic. You're tracking metrics with SignalFx. You're deploying with feature flags, so of course you're using LaunchDarkly. And you're storing logs and Docker containers somewhere on the internet. You're doing pull requests and reviews in GitHub, and you're tracking issues in JIRA. Moving fast is absolutely great. But if your team is changing 50 things 50 times a day, how do you know what is impacting what, and how [inaudible 00:05:52] to impact on your application?

I would argue that the longer it takes to discover the cause of negative impact, the more it will cost to fix, if it gets fixed at all, honestly. The reason is that the individual responsible for a change loses context. If you ship something that, say, increases your error rate by 1%, and you're told about it within five or 10 minutes, you're going to go ahead and fix it. If it shows up two weeks later, that issue is going to go into your backlog, where it has to fight with other issues for priority. And whoever eventually works on it doesn't necessarily have the context they need to understand why that change was negative instead of positive.

Another issue is that when you're moving as fast as these modern teams do, the cause of negative impact is buried in a thousand changes made within the span of just a couple of weeks. With all that change, it's really hard to identify what's going on. Furthermore, what very frequently happens is that the diminished state becomes your new normal: without measuring, you don't necessarily know what your desired state is, and you run the real risk of settling into this new negative state. Oops. They should absolutely be changing. Are they not? Did you see that change there?

Yoz Grahame:

No, I'm not seeing any changes. Are you still sharing the ... Sorry, viewers, please excuse us.

Dylan Etkin:

[inaudible 00:07:47] It should be on some battle stories. Did you see any of my slide changes?

Yoz Grahame:

I don't think so.

Dylan Etkin:

Okay.

Yoz Grahame:

[crosstalk 00:07:57] Let me just check, because it was definitely working before.

Dylan Etkin:

I'm going to reload that and see if that helps us. [crosstalk 00:08:11] my initial slide?

Yoz Grahame:

No, I'm afraid not.

Dylan Etkin:

[crosstalk 00:08:15] some amazing things out there.

Yoz Grahame:

Yeah. Could you stop sharing your screen and then share it again?

Dylan Etkin:

I absolutely can do that. Let me stop sharing. [inaudible 00:08:31] Stop sharing and start sharing again. Sorry, everybody out there.

Yoz Grahame:

Oh, there we go. Okay. Now I can see-

Dylan Etkin:

Now you can see the slides again?

Yoz Grahame:

I can see Google slides.

Dylan Etkin:

Okay. [crosstalk 00:08:46] Do this. Can you see it or not?

Yoz Grahame:

Yeah. Now we can see it.

Dylan Etkin:

[crosstalk 00:08:58] Big size. You can still see it.

Yoz Grahame:

Yep. Brilliant. [crosstalk 00:09:08] do a page back one. Just [inaudible 00:09:10] changing.

Dylan Etkin:

You're all probably wondering why I was talking endlessly on just one slide. You're probably thinking-

Yoz Grahame:

[inaudible 00:09:18] Very entertaining and fascinating. [inaudible 00:09:22]

Dylan Etkin:

[crosstalk 00:09:22] like pausing moments too.

Yoz Grahame:

Okay. Let's do a very quick overview of those slides we missed and then carry on if that's all right.

Dylan Etkin:

Okay.

Yoz Grahame:

Thank you.

Dylan Etkin:

Well, I opened with the bold statement and talked about what impact is. At the end of the day, it's the effect on the utility that you provide, with some examples that you may remember from a few minutes ago. Then I talked about just how fast DevOps teams are moving, the number of changes going on, and how tying those changes back to individual impact can be quite difficult in a fast-moving environment.

And this slide is the one I was just blabbing on about: at the end of the day, a high time to discovery of negative impact has costs, and those costs come out in a million different ways. I'll go into that a little bit more now. That's where we were. I was going to shift into some battle stories. I don't know about you guys, but I like stories; they add context to things. I'll talk a little about some experiences from my past where impact really played into a situation, and where a lack of monitoring and a high time to discovery ended up costing teams real things.

The first one I'll talk about is a recent occurrence at Sleuth. Funnily enough, I was working on a feature around impact. It's a very meta story, because we're building a tool that measures the impact of the things you're doing on deploys. To do that, we need to get a bunch of your SLI data and figure out what the range of normal is for you. Then we use an anomaly detection, or outlier detection, tool. There's a great tool in Python, by the way, called PyOD. It's the Python outlier detection tool, and it's got all these modern algorithms built right in. It's great. Long story short, though, it's a bit of a memory hog.

I was using this tool to learn from the training data for all of the metrics we collect. I did a deploy at about 3:30 in the afternoon. Working in a modern way, we hid it behind a feature flag, and no code was actually executing through any of it, so it seemed like a fairly safe deploy. But we didn't have any impact detection set up at the time, because obviously we were trying to build it.

I went to sleep thinking, "Hey, I've done a great thing and shipped this feature." Then, while I was sleeping, one of our developers in Europe got an alert: memory had grown across our entire fleet. You could see this graph of things going up, up, up. She spent a bunch of time digging into it and trying to figure out what was going on. By the time I woke up and jumped online, a second developer had also been looking into the problem. They probably wasted about three hours on it. When we eventually tracked it down to the moment of the deploy, it was really easy for me, with the context, to turn around and make a change. Basically, we moved the import from global to local, which meant we would only pay that memory cost when we actually needed to use the thing.

But I think the interesting part of this story is that quick detection of the impact meant that the person with the context, me, could quickly create a fix. The really scary part is that we got lucky. We were relying on alerting thresholds to find this bug. If we hadn't breached one, this probably would have become our new normal, and we would have just moved on thinking, "Hey, this is how much memory our application takes," which would have had a cost for us. You can see that if we had been measuring impact on memory, we would have caught it straight away.
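Learning a "range of normal" and flagging points outside it can be sketched without PyOD. The example below swaps in a much simpler technique, a robust z-score built from the median and the median absolute deviation (MAD), using only Python's standard library; it is not PyOD's API and not necessarily what Sleuth does. The function names are hypothetical, and the 3.5 cutoff is a common rule of thumb, assumed here.

```python
import statistics


def robust_z_scores(history: list[float], new_values: list[float]) -> list[float]:
    """Score new samples against a baseline using the median and MAD.

    The robust z-score is 0.6745 * (x - median) / MAD; the 0.6745 factor
    makes MAD comparable to a standard deviation for normally distributed data.
    """
    median = statistics.median(history)
    mad = statistics.median(abs(x - median) for x in history)
    if mad == 0:
        # A perfectly flat baseline: any deviation at all is treated as extreme.
        return [0.0 if x == median else float("inf") for x in new_values]
    return [0.6745 * (x - median) / mad for x in new_values]


def outliers(history: list[float], new_values: list[float], cutoff: float = 3.5) -> list[float]:
    """Return the new samples that fall outside the learned range of normal."""
    scores = robust_z_scores(history, new_values)
    return [x for x, z in zip(new_values, scores) if abs(z) > cutoff]
```

For a memory baseline hovering around 500 MB, `outliers(history, [504, 690])` keeps 504 inside the range of normal and flags 690. Median and MAD are used instead of mean and standard deviation so that a few past spikes in the history don't widen the range of normal.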
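Moving an import from module level into the function that uses it is a standard Python way to defer a heavy dependency's cost. A minimal sketch: `train_baseline` is a hypothetical function, and the standard library's `statistics` module stands in for a memory-hungry library such as PyOD.

```python
# Before: a module-level import runs in every process that imports this
# module, including web containers that never call train_baseline().
#
#     import heavy_detection_library  # hypothetical heavy dependency
#
# After: the import is deferred until the function actually runs.


def train_baseline(samples: list[float]) -> tuple[float, float]:
    """Return (mean, population standard deviation) of the samples.

    `statistics` stands in for a memory-hungry library; only processes
    that call this function pay its import cost.
    """
    import statistics  # deferred, function-local import

    return statistics.fmean(samples), statistics.pstdev(samples)
```

Python caches loaded modules in `sys.modules`, so later calls reuse the first import. The trade-off is that the first call is slower, and a missing dependency surfaces at call time rather than at startup.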

The next story I want to tell is from my days on Bitbucket. Bitbucket gets a ton of SSH traffic; for those who don't know, it's a source code hosting site. That means clients are connecting over SSH, doing something, and disconnecting thousands of times a minute. Early on, Bitbucket had a very naive implementation. Everything was in Python, and we had hacked OpenSSH to spin up a Python environment and execute a Python script. I think in the early days we loaded all of Django every time we did that, and that was a crushing blow to our CPU. Loading an entire Python environment every time somebody did a tiny little Git operation over SSH took a real toll on our overall fleet. And we were on bare-metal hardware, which meant we had to manage our resources pretty thoroughly, too.

We fixed it and basically got back 50% of our CPU by removing the need to bring in Django. Then at some point, a developer innocuously added an import that brought in another module, which eventually brought in the entire Django stack. That change didn't breach any alerting thresholds, and we weren't monitoring the impact on CPU usage. It probably took us about six months to find that change. The impact on customers was pretty high, because every operation they tried to do took longer. And the impact on us was very high: I think we ended up spending about 50,000 in server costs that we eventually needed, but didn't need at the time. You can imagine a world where we had discovered that impact the moment we made the change, and we would have avoided all of those issues.
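A regression like this can be caught at review time with a check that imports the lightweight entry point in a fresh interpreter and fails if a forbidden package ends up in `sys.modules`. A sketch under that assumption; `transitively_imports` is a hypothetical helper, and this is not a description of Bitbucket's actual tooling.

```python
import subprocess
import sys


def transitively_imports(entry_module: str, forbidden: str) -> bool:
    """Import `entry_module` in a fresh interpreter and report whether
    `forbidden` (or any of its submodules) ended up in sys.modules."""
    probe = (
        "import importlib, sys\n"
        f"importlib.import_module({entry_module!r})\n"
        f"hit = any(m == {forbidden!r} or m.startswith({forbidden!r} + '.')"
        " for m in sys.modules)\n"
        "print('yes' if hit else 'no')\n"
    )
    # A fresh process matters: the current interpreter (for example, a test
    # runner) may already have the forbidden package loaded for other reasons.
    result = subprocess.run(
        [sys.executable, "-c", probe],
        capture_output=True,
        text=True,
        check=True,  # raises CalledProcessError if entry_module fails to import
    )
    return result.stdout.strip() == "yes"
```

In a CI test, something like `assert not transitively_imports("ssh_entry_point", "django")`, with `ssh_entry_point` standing in for your own module, would fail the build on the commit that added the import instead of six months later.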

The last example I want to run you through is one that I've seen a number of times on teams I've run, and I'm guessing you have seen it too. A lot of what Statuspage does is send email notifications. When a customer creates an incident, we send a bunch of notifications to all of their users to let them know that things are down. We tried to do that in a very redundant way, with two email providers: SendGrid and Mailgun. SendGrid was not set up to do DKIM verification, which meant that some of the emails we were sending were not as trustworthy or valid as they could be.

We decided to spin up a new project to improve DKIM for all of our SendGrid users. The catch is that we did exactly the opposite. The whole point of this project, and of all the work we did, was to make things better. We spent weeks on it: we went out to users and asked them to put new keys into their DNS, and we did all sorts of things to make DKIM processing better. What we didn't do was measure the impact. We had stats on the amount of mail that hit inboxes, but we didn't have stats on how many emails were going to spam.

Over a six- to eight-month period, issues would come into support where it looked like some emails were disappearing, and we would track them down. We must have used up about 10 different developers' worth of time investigating these issues. Eventually, probably eight months down the road, one of the developers noticed, "Hey, this is incorrectly configured, and maybe even a third of the emails that we're sending via this provider are going straight to spam for customers."

This is an example where we weren't measuring the impact of the thing we were trying to fix. In trying to make things better, we had actually made things worse. That was a huge impact on customers, because we had asked them to make DNS changes and now needed them to make changes again. And the time to resolve this issue was huge: probably about 12 weeks of dev time. Had we put impact measurements in place at the beginning, we would have known that what we were doing didn't actually have the positive effect we were looking for.

All of these stories share a common thread: the longer it takes to discover impact, the longer it takes to fix, and the larger the overall cost. So how do we get better? How do we get to a place where impact measurements are brought really close to home for the individual? That's what I want to talk you through now. How do we improve? What are three things that you out there can do right now, today? Here are three actions that I think would make you worlds better than you probably are at the moment. They're fairly simple, and a lot of you may already be doing them.

The first thing you can do is find one or two SLIs with reliable measures. They should be real-time and reliable; that's very important. Take a good hard look at your application and ask yourself: what is the primary source of utility the application offers, and what statistic can we capture, reliably and in real time, that would tell us whether we are doing what we're supposed to be doing? Once you have that SLI defined, you really need to communicate it to your entire team, because this sort of thing doesn't work unless your whole team is bought in and feels a sense of ownership.

That means using whatever mechanism you have for communicating with your team. Maybe pre-pandemic you had a dashboard up with key statistics; you need to put the SLI up there. Or maybe you have a Slack channel where you publish interesting information. Whatever way you have of sharing information with your team, you want to get that SLI into it so that everybody is constantly reminded of it.

And the last thing I would suggest is to make impact part of your definition of done, such that your SLIs remain positive or, at the very worst, neutral. Putting an SLI, or impact, into your definition of done means you're pushing every developer to take responsibility for the changes they make to your SLIs. At the end of the day, developers are your sources of change, and they're the ones who need to understand the impact of what they're doing.
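As an illustration of an SLI that is both real-time and reliable, here is a rolling "share of requests that met a latency threshold" calculation over a recent window. The 20 ms threshold echoes the LaunchDarkly feature flag example and is hypothetical, as are the class name and the five-minute default window.

```python
import time
from collections import deque
from typing import Optional


class RollingLatencySLI:
    """Real-time SLI: the share of requests in the last `window_s` seconds
    that completed within `threshold_ms` (hypothetical example)."""

    def __init__(self, threshold_ms: float, window_s: float = 300.0) -> None:
        self.threshold_ms = threshold_ms
        self.window_s = window_s
        self._events = deque()  # (timestamp, met_threshold) pairs, oldest first
        self._good = 0          # number of events in the window that met the threshold

    def record(self, latency_ms: float, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        good = latency_ms <= self.threshold_ms
        self._events.append((now, good))
        self._good += good
        self._evict(now)

    def value(self, now: Optional[float] = None) -> Optional[float]:
        """Current SLI in [0, 1], or None if there is no recent traffic."""
        now = time.monotonic() if now is None else now
        self._evict(now)
        if not self._events:
            return None
        return self._good / len(self._events)

    def _evict(self, now: float) -> None:
        # Drop events that have aged out of the window.
        while self._events and self._events[0][0] < now - self.window_s:
            _, good = self._events.popleft()
            self._good -= good
```

Counting good events over total events keeps the SLI between 0 and 1 regardless of traffic volume, and the optional `now` parameter makes the class easy to test with fixed timestamps.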
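One way to make "SLIs stay positive or neutral" checkable is to compare a window of SLI samples from before a deploy with a window from after it. A minimal sketch, assuming a hypothetical 2% tolerance band for "neutral" and a nonzero baseline; in practice the windows and tolerance would come from your own data.

```python
from statistics import fmean


def deploy_verdict(before: list[float], after: list[float],
                   tolerance: float = 0.02, higher_is_better: bool = True) -> str:
    """Classify a deploy's impact on an SLI as improved, neutral, or degraded.

    Compares the mean of the post-deploy window to the pre-deploy window;
    relative changes within +/- tolerance count as neutral.
    """
    baseline = fmean(before)
    if baseline == 0:
        raise ValueError("baseline is zero; use an absolute comparison instead")
    change = (fmean(after) - baseline) / abs(baseline)
    if not higher_is_better:
        # For metrics like latency, an increase is a regression.
        change = -change
    if change > tolerance:
        return "improved"
    if change < -tolerance:
        return "degraded"
    return "neutral"
```

Under this scheme a change counts as done only when `deploy_verdict(...)` is not `"degraded"`, and a deliberate trade-off, such as accepting slightly higher latency for a feature, becomes an explicit decision rather than a silent drift.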

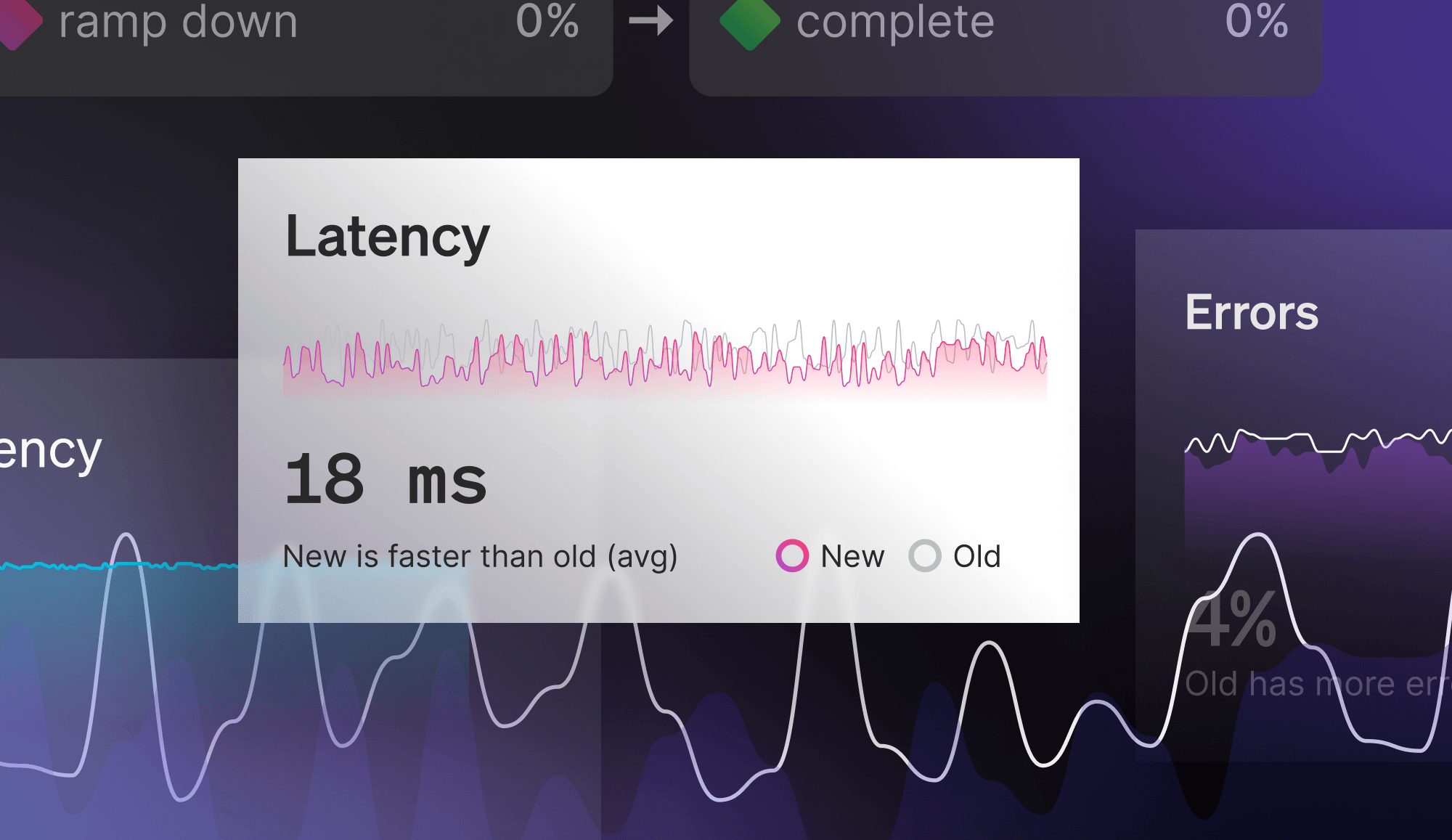

You might be looking at this and thinking, "Hey, I've got a modern CD platform. I don't need to worry about any of this, because we're already rolling so far forward that everything is great for us anyway." There are definitely some amazing tools out there nowadays, and they are amazing at addressing the symptoms of this problem. I'm going to argue that they're not so great at addressing the cause. Some things that larger companies, and even smaller teams, are doing include maintaining a dedicated SRE team that monitors everything; their job is to understand impact and to get in there if things aren't going right. And then there are modern CD platforms like CircleCI or Harness.io, where you can set up canary deploys, do continuous verification, and have automated rollbacks.

These systems are often set up close to alerting thresholds, so they're not great at solving the frog-in-boiling-water problem, where the water heats up so gradually that by the time it's boiling, the frog is in real trouble. They also don't shorten the time to discovery of incremental negative impact. Again, if you're not breaching some sort of crazy limit and you're just making things a little worse every day, these systems aren't going to catch that. They're also really resource-intensive to set up and maintain. And the downside, from my perspective, is that they move some responsibility away from individuals. At the end of the day, we want to be empowering. DevOps is about empowering everybody on the team to understand the changes they're making, and that needs to be a cultural thing.

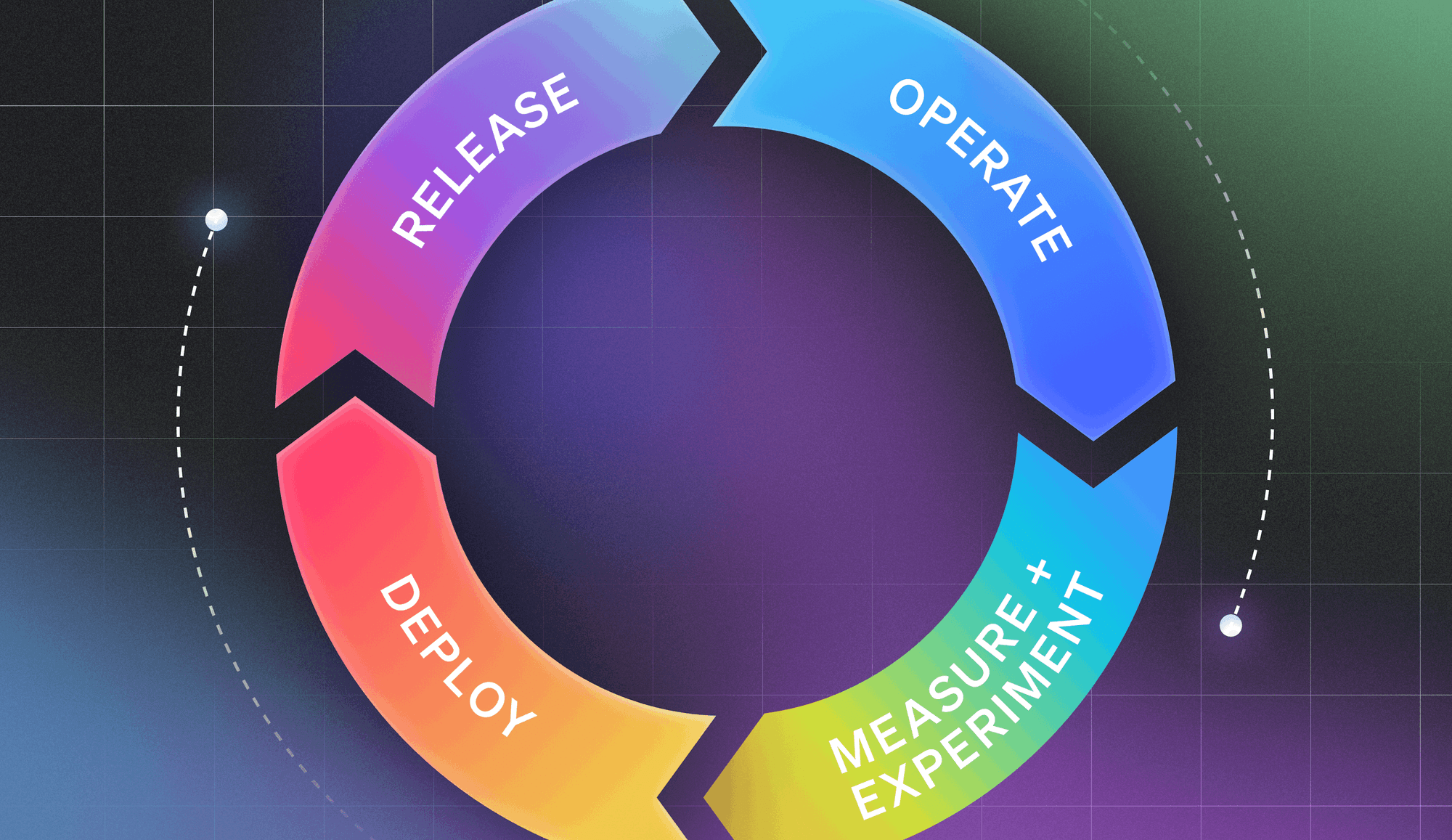

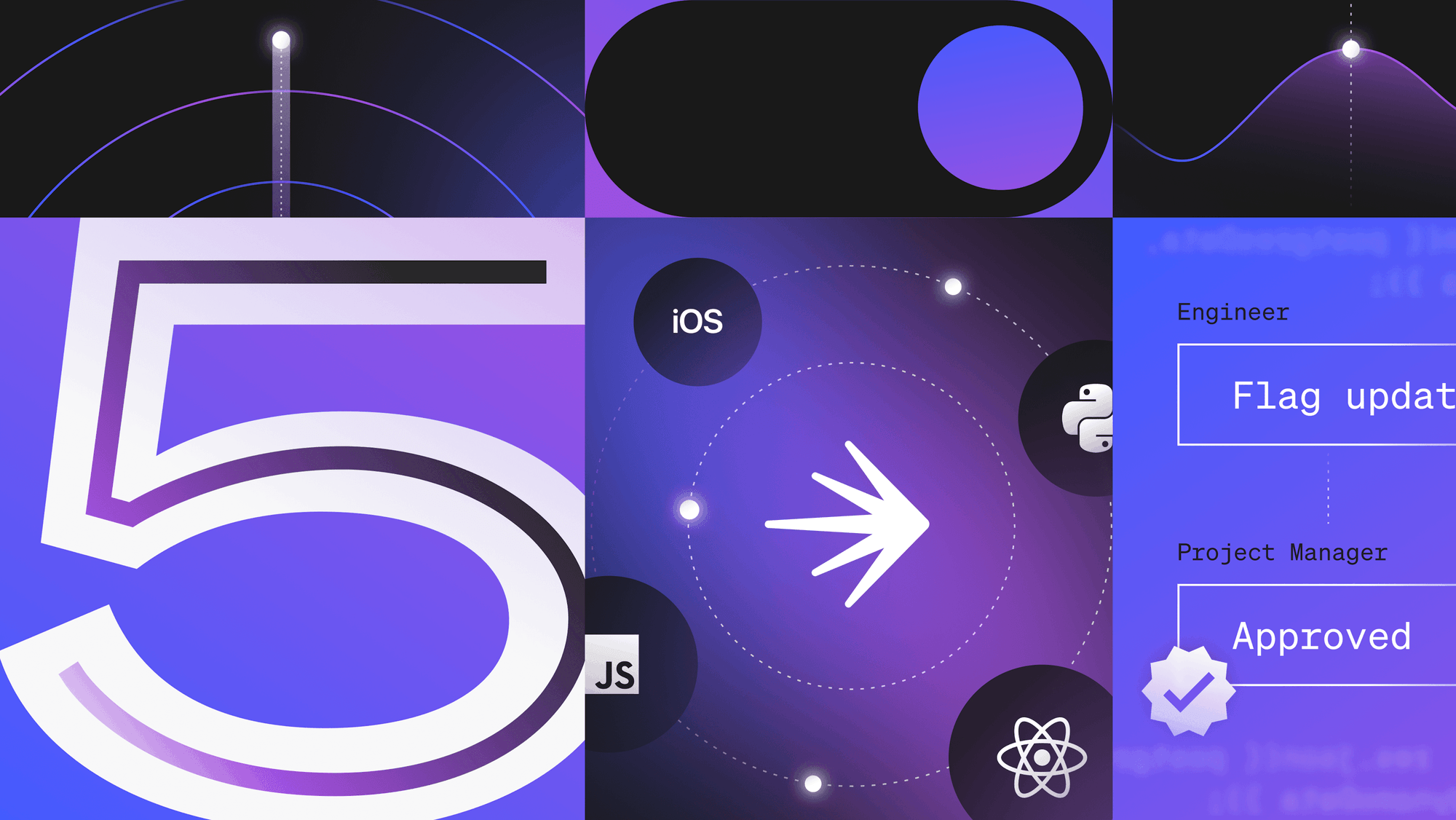

I'm going to talk a little bit about my vision of the future, and how tools might support us in a world where we care a lot about impact. I am going to talk a little bit about Sleuth, but I think you could do a lot of these things without Sleuth. It's just, why would you want to? The key to all of this is tying deploys to impact. You're making changes, and changes can come from any number of directions in our modern environments: from external sources, from feature flags, from code, or from infrastructure. Then we want to define SLIs in some sort of system of record, and we want to be able to detect anomalies in them.

Again, it's fine to have a range of normal; you just want to know: have I breached normal? Have I gotten way worse, outside of normal, or have I made things better? Do we have fewer errors as a result of this change? You really want to understand how the things you're adding to the system are affecting it. Another key to getting better at this is alerting authors about impact within minutes. If you have some monitoring set up for impact, maybe that means alerting somebody via Slack. That's what we're doing in Sleuth: when you've made a change and it has gone out, we'll send you a direct message in Slack saying, "Hey, your change went out, and according to your SLI, it's healthy. So go ahead and keep having your coffee."

The other thing you need is one place to easily understand the impact of all the changes you've made. As I mentioned earlier, DevOps teams have an amazing array of tools at their disposal, but it's unreasonable to think that every individual knows every nook and cranny where we store everything on the internet today. There needs to be one simple place to understand what I am doing and how it has affected things in terms of deployments. And I would argue that this future won't come true if we don't automate, automate, automate. If you have manual steps involved, it's really hard to understand what you're doing: are we getting better, worse, or staying the same over time? So you need automation in place to support this way of working.

To sum it up real quick: you need to understand your impact in production. You need to know what's important to your service and measure it in real time. You need to empower developers to make positive impact, and we really need to reduce the discovery time down to minutes. The way we do that is with modern tools for the modern teams we're working on. That's what I wanted to talk to you about regarding impact in production. Thank you for your time.
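Tying deploys to impact starts with recording every change, whatever its source, as a timestamped event. A sketch under that assumption: the `Change` record, its `source` values, and the one-hour lookback are hypothetical, and matching by time window only produces suspects to investigate, not proof of cause.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Change:
    """A hypothetical record of one change reaching production."""
    at: datetime
    source: str   # e.g. "code", "feature_flag", "infrastructure", "external"
    author: str
    summary: str


def suspects(changes: list[Change], anomaly_at: datetime,
             lookback: timedelta = timedelta(hours=1)) -> list[Change]:
    """Return changes that landed within `lookback` before the anomaly,
    most recent first."""
    window_start = anomaly_at - lookback
    hits = [c for c in changes if window_start <= c.at <= anomaly_at]
    return sorted(hits, key=lambda c: c.at, reverse=True)
```

The lookback window ties directly to time to discovery: the later an anomaly is noticed, the wider the window has to be and the more unrelated changes land in it.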
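The "tell the author within minutes" step can be sketched with a Slack incoming webhook, which accepts an HTTP POST with a JSON body containing a `text` field. The webhook URL and both helper functions are hypothetical. An incoming webhook posts to the channel it was created for; a direct message to a specific person would typically go through Slack's Web API method `chat.postMessage` with a bot token instead.

```python
import json
import urllib.request


def impact_message(author: str, deploy: str, sli: str, verdict: str) -> dict:
    """Build a Slack message payload summarizing a deploy's impact."""
    if verdict == "degraded":
        text = f"{author}: {deploy} is out, and {sli} looks worse than normal. Take a look."
    else:
        text = f"{author}: {deploy} is out, and {sli} is {verdict}. Keep having your coffee."
    return {"text": text}


def notify_author(webhook_url: str, payload: dict) -> int:
    """POST the payload to a Slack incoming webhook; return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

`impact_message` is kept separate from the network call so the wording can be tested without contacting Slack.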

Yoz Grahame:

Thank you so much, Dylan. Can you hear me all right?

Dylan Etkin:

Yeah, absolutely.

Yoz Grahame:

That was fantastic. Thank you. While you were talking, I was saying to Kim, our producer, "This is fascinating, and I can imagine using this for all kinds of different things." Before we get into that: to anybody watching, thank you so much for being here. Dylan is going to stick around, and we're going to keep streaming for the next half hour or so. Please do throw any questions you have at us, because this is a fascinatingly diverse topic.

The story you told at the beginning about spotting the outlier, the memory consumption outlier with PyOD I think you said, was amazing. How did you have that tied in? What were you feeding to PyOD, and is this part of what Sleuth does now?

Dylan Etkin:

Well, PyOD is actually a library we use internally, and I'm guessing its implementation just does an awful lot with memory, which is completely reasonable. I'm happy to pay the price of that memory, but only when we're using it. Python being a pretty dynamic language, we had this import, and so even our web containers, which didn't need that library at all, [inaudible 00:26:25] it automatically loads everything up and goes, "Hey, let's preload all these things," and they were preloading PyOD, which bloated memory by about 30 or 40%. And like I said, honestly, we just got lucky. We monitor that stuff and alert on it via AWS, but it only crept above that alerting threshold over time. There was a good six hours where it was fine, and we would never, ever have known about it.

And I'm quite pleased that it reached our alerting thresholds, because we got a clue. But yeah, the irony was that we were building the very tool that, had we finished building it, would have given us the impact monitoring to see exactly that. We would have been like, "Hey, we're out of norm for memory." We needed to build the tool in order to find the problem that we introduced.

Yoz Grahame:

That's a selling point in a nutshell, really, isn't it? That's fantastic. We've been talking about SLIs, service level indicators, with memory usage being part of that. Another term I've heard recently when watching this stuff is service level objectives. Could you talk a little bit about that, and how having those really helps with spotting these kinds of problems?

Dylan Etkin:

For sure. I think the distinction is really ... It's about, again, the utility that your application is offering. The objectives, I think, are more like, "Are you trying to respond to a certain type of request?" Let's say for LaunchDarkly, "Are you trying to respond to a feature flag request in under 20 milliseconds?" or something like that. They're very similar, but often they can be, I guess, aspirational. I see them a little more like your alerting limits: if you're saying, "We cannot breach X," then it's probably reasonable to fire off your PagerDuty alerts when you actually breach X. I think that as an industry we've done a pretty good job of capturing the things we need to alert on, and of waking people up in the middle of the night when those bad things happen.

But so much of what we do is change things day after day, and we want to change things quickly. We've discovered that we can respond to incidents faster and have fewer breakages when we make discrete, tiny changes, or even changes via feature flags. But it's really hard to know if you're just creeping up and making things a little worse all the time. For me, that's the distinction between the SLI and the SLO: I wouldn't mind waking somebody up over an SLO breach, but if we woke somebody up because things got a tiny bit worse, well, maybe you meant them to get worse. You just need to know that you did it and then make a conscious choice about it.

Yoz Grahame:

Also, SLOs give you something to ... if there's a steady rate of change in your SLI, you can see that you're heading towards breaching your SLO.
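A steady drift toward an SLO can be made concrete by fitting a least-squares line to recent SLI samples and extrapolating it to the SLO limit. A sketch assuming one sample per day of a lower-is-better SLI such as latency; `days_until_breach` is a hypothetical name, and real SLI data is noisier than a straight line, so treat the result as an early warning rather than a forecast.

```python
from typing import Optional


def days_until_breach(samples: list[float], slo_limit: float) -> Optional[float]:
    """Fit a least-squares line to daily samples (days 0, 1, 2, ...) of a
    lower-is-better SLI and return how many days after the last sample
    the line reaches `slo_limit`.

    Returns 0.0 if the latest sample already breaches the limit, and None
    if the trend is flat or improving.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    if samples[-1] >= slo_limit:
        return 0.0
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    # Ordinary least-squares slope: co-deviation of (x, y) over squared x deviation.
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, samples)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing_day = (slo_limit - intercept) / slope
    # Days remaining after the most recent sample (never negative).
    return max(crossing_day - (n - 1), 0.0)
```

For daily latency readings of 100, 102, 104, 106, and 108 ms against a 120 ms SLO, the fitted slope is 2 ms per day and the line crosses 120 ms six days after the last sample, well before a threshold alert on the SLO would fire.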

Dylan Etkin:

For sure.

Yoz Grahame:

And that's something that you can notice as well.

Dylan Etkin:

Yeah, absolutely. And I think what we've done today is make it the domain of an SRE or an operations person, which is really, really against the grain of DevOps. I think a lot of our teams are using DevOps practices, but [inaudible 00:30:31] on teams that I've been involved in. If I have a team of 30 developers, I've got maybe four or five of them who can respond to an incident with confidence. The other 25 definitely have the skills to do so, and they have the desire to do so. But they don't know where all the bodies are buried, and they don't have a single system. They're like, "I don't know. Did I break that? Is it normally that way? Should I roll it back or shouldn't I?" We just aren't empowering them with the tools and information they need to confidently jump in there and do DevOps.

Yoz Grahame:

Yeah. These days especially, with the observability trend, it's very easy to compile huge numbers of different statistics and monitor all of them. But knowing which of those are meaningful, and which changes are meaningful, is really difficult, as you're saying, especially if you're a newbie looking at giant dashboards with 80 different stats on them. That's where I'm wondering about the power of this, especially what you're doing in terms of tying impact back to the changes that caused it. There are loads of things that can monitor output and effects, but having insight into how to trace back to the causes, or the possible causes, is another matter. I realize you can't directly deduce exactly how the changes are causing the effects, so in theory you can have some false positives. Is that right?

Dylan Etkin:

I think so. But I think you also hit on another thing that's really important: the evolution of the change needs to be captured too. I think we live in a really lucky and wonderful time, because we have all these amazing developer tools, and a lot of modern teams have adopted them. I like to think of them as primitives. You're tracking issues somewhere: maybe you're using JIRA, maybe Clubhouse, maybe Pivotal, whatever, who knows. You're [inaudible 00:33:01] your source somewhere: GitHub, Bitbucket, GitLab, whatever. You're using pull requests for code review. You have some CI system somewhere. You probably have an error tracker. You're doing some form of monitoring and observability. These are the primitives that tell you how your changes developed.

We can find all the JIRA issues, the pull requests, the comments, the past builds. We can find everything you need when you want to understand whether a change was negative: how long was it in, how long did it take to create, who was involved, what did we do, and what was the intent? You probably have intent wrapped up in your issues, your design docs, that sort of stuff. And then, what was the actual code change? Let me jump to that quickly. It's like the old Git bisect days, but [crosstalk 00:33:56]. I think you really need that evolution. You need to understand, "Hey, what was just deployed? Was it just a feature flag toggle? And why would that affect performance by 500 milliseconds?"

Understanding the evolution of the thing and then tying it to the impact is key. And you're right, I do think you will get some false positives; we're actually seeing that. The first incarnation of this stuff that we worked on was integrating with error trackers, and some of the smaller teams using Sleuth are doing a great job of keeping their errors minimal. Most of the time they're running at zero. Then somebody comes along and does a drive-by, some bot hits them, and it generates five errors. That's dramatically more than zero, and it actually had nothing to do with the deploy. So there are circumstances where they don't correlate. But when you do roll out a change and that change is unhappy, it's often capturing exactly the information you're looking for.
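The drive-by bot case shows why a purely relative comparison breaks down near zero: five errors against a baseline of zero is an infinite relative increase. A common mitigation, sketched here as an assumption rather than as how Sleuth handles it, is to require both a minimum absolute increase and a minimum ratio before flagging a deploy. `error_spike` and both thresholds are hypothetical.

```python
def error_spike(baseline_count: float, current_count: float,
                min_absolute: float = 20, min_ratio: float = 2.0) -> bool:
    """Flag a spike only if errors rose by at least `min_absolute` AND
    to at least `min_ratio` times the baseline.

    The absolute floor keeps a handful of bot-generated errors on a
    zero-error service from being reported as a regression.
    """
    increase = current_count - baseline_count
    if increase < min_absolute:
        return False
    # A zero baseline has no meaningful ratio; the absolute floor decides.
    if baseline_count == 0:
        return True
    return current_count / baseline_count >= min_ratio
```

With these defaults, five errors after a zero-error baseline is ignored, 50 is flagged, and a rise from 100 to 130 is ignored because it is less than double the baseline.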

Yoz Grahame:

Is it able to do ... Because I can imagine, say, having a sudden traffic spike that is caused by entirely external factors. I suppose in that case, what you care about for SLIs is stats per request rather than totals. How do you differentiate that? How do you say, "This is something that is caused by a traffic spike, and this is ..."? Because I realize that you can theoretically very easily create stats where you're automatically dividing by the number of requests. But you also want to keep an eye on your totals.

Dylan Etkin:

I think that comes down to the team. One of the things I was trying to make a point of in that presentation was that SLIs are very personal. They are tailored to the application. They mean something to you; they don't necessarily mean something to somebody else. There are certain inputs that you can derive automatic SLIs for, like error rates: how many errors do you normally have versus how many errors are you having now? Or, say, response time. That's a pretty obvious one, where you're like, "Normally we're in this range, and for some reason we're outside that norm." But I've seen really complicated usage of Datadog where somebody is sending in ... Or the Statuspage example is a great one: the percentage of emails that hit end users' inboxes within five minutes. That's not so easy.

We basically had Mailgun and SendGrid sending us webhooks that came back and told us, "I did deliver that thing," and then we had to go and say, "Okay, we sent it at this time. You told me you delivered it at this time." The time between the two is [inaudible 00:37:03], and maybe we would only get that calculation an hour or two later because maybe [inaudible 00:37:08] were behind. And then we had to smooth it out and turn it into a percentage and whatever. But at the end of the day, we had a number that we could monitor, and it was very custom to us. [inaudible 00:37:18] moving and stuff, I think, ends up being part of the responsibility of the team: understanding what's important to them.
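The Statuspage SLI Dylan describes boils down to a join between send times and delivery times. The sketch below assumes both have already been extracted into dictionaries keyed by a message ID; it does not model the real Mailgun or SendGrid webhook payloads, and `pct_delivered_within` is a hypothetical name. The five-minute limit comes from the talk.

```python
from datetime import datetime, timedelta


def pct_delivered_within(sent_at: dict[str, datetime],
                         delivered_at: dict[str, datetime],
                         limit: timedelta = timedelta(minutes=5)) -> float:
    """Percentage of sent messages whose reported delivery time is within
    `limit` of the send time.

    Messages with no delivery record count as misses, so run this only
    over sends old enough that late webhooks should have arrived (the
    "hour or two later" delay mentioned in the talk).
    """
    if not sent_at:
        raise ValueError("no sent messages in this window")
    on_time = sum(
        1 for msg_id, sent in sent_at.items()
        if msg_id in delivered_at and delivered_at[msg_id] - sent <= limit
    )
    return 100.0 * on_time / len(sent_at)


t0 = datetime(2020, 4, 23, 12, 0)
sent = {"a": t0, "b": t0, "c": t0, "d": t0}
delivered = {
    "a": t0 + timedelta(minutes=1),   # on time
    "b": t0 + timedelta(minutes=5),   # exactly at the limit, counts
    "c": t0 + timedelta(minutes=12),  # late
}                                     # "d" never reported: a miss
print(pct_delivered_within(sent, delivered))  # 50.0
```

Smoothing this over time (for example, a rolling average across windows) would be a separate step, as Dylan notes.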

Yoz Grahame:

Right. Just to recap quickly if you are just joining us: this is Dylan Etkin, CEO of sleuth.io, and I am Yoz Grahame, Developer Advocate at LaunchDarkly. We're talking about measuring the impact of changes in production. We're going to be here for another 20 minutes or so. If you have any questions, please do drop them in the chat and we're very happy to address them.

We're just talking about SLIs, deriving new SLIs, and especially working out their value. I can imagine that it's very tempting ... You tell me: is it worthwhile trying to automatically derive correlations? Let's say you're measuring 200 different stats in your observability system. Is it worth trying to derive correlations between a rise in one stat and SLO problems, or is it going to end up causing more false positives than you think?

Dylan Etkin:

You mean correlation between different SLIs basically?

Yoz Grahame:

What I'm thinking is ... well, let's say you're doing observability on different things and you're tracking a huge number of stats. Then you pick out a few and you say, "Right, those are our SLIs." Is it worth trying to derive new SLIs from existing stats, say by watching for correlations like, "Hey, when this starts going up, five minutes later we notice that the errors start happening"?

Dylan Etkin:

Right, right. I do think so. And I do think, if you're going to get this right, you have to account for that, because some people have a pattern that is pretty normal. They do a deploy and maybe things are slower while their workers warm up, or whatever it is. Or for some reason they're doing a swap-over that's not so great and they're generating a few errors every time they deploy. That's just the nature of the way they're doing their deployment. And I think those tend to follow a curve that looks similar each time.

The way we're thinking about that is that we're doing a lot of sampling and then comparing the samples to each other. We're saying, "Let's compare the five minutes after this deploy to the five minutes after every other deploy, because maybe five minutes after, you're at this level, whereas two minutes after you're at this level, and then 20 minutes later you're back down at this level." And you do find that there can be these correlated, spiky behaviors. But if you're going to get true data, you don't want to cry wolf just because you're seeing something that's normal.
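One way to read "compare the five minutes after this deploy to the five minutes after every other deploy" is to build a baseline per offset from past deploys, so a warm-up spike that happens every time is treated as normal. The sketch below is an assumption about how that could work, not Sleuth's method; it uses a plain z-score from Python's `statistics` module, and `post_deploy_zscore` is a hypothetical name.

```python
import math
import statistics


def post_deploy_zscore(history: list[list[float]], current: list[float],
                       minute: int) -> float:
    """Compare this deploy's metric at `minute` after deploy with past
    deploys at the same offset.

    history[i][m] is the metric m minutes after past deploy i; current[m]
    is the same for the deploy under review. Returns how many standard
    deviations the current value sits from the historical mean.
    """
    past = [run[minute] for run in history if len(run) > minute]
    if len(past) < 2:
        raise ValueError("need at least two past deploys at this offset")
    mean = statistics.mean(past)
    sd = statistics.stdev(past)
    diff = current[minute] - mean
    if sd == 0:
        # Every past deploy looked identical here; any difference is extreme.
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / sd


# Errors per minute after three past deploys: a warm-up spike at minute 0
# that settles down, every time.
history = [[5, 3, 1], [7, 3, 1], [6, 3, 1]]
print(post_deploy_zscore(history, [6, 3, 1], 0))   # 0.0: the usual spike
print(post_deploy_zscore(history, [12, 3, 1], 0))  # 6.0: well outside the norm
```

A naive check against the pre-deploy level would flag the minute-0 spike on every deploy; comparing like with like avoids crying wolf.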

Yoz Grahame:

Yeah. How about severity values? Let's say you see certain bad failures and you're in a team that's dealing with all kinds of different problems regularly. Is there a way to say, "Right, this is a [inaudible 00:40:50] one"? Sorry, that should be clearer. For those watching, we're talking about when you've got a large number of tickets going to your SREs, your site reliability engineers, and they need to prioritize which ones are hurting most. How would you do that with this kind of automated impact measurement?

Dylan Etkin:

Right. The way that we're thinking about it is ... again, because we're using anomaly detection, we get a sense of how anomalous your current data is. I think that raw values are useful, but your observability platform, the place where you're storing this information, is the right place to get that raw value. If you want to do some real deep dives, that's probably where you should be doing them. What I think is needed in this day and age is some sort of abstracted sense of that, where we're saying, "This is healthy," or "It's on its way to not healthy," [inaudible 00:41:52] or "It is unhealthy."

Which category you fall into depends on how anomalous the data is. These detection techniques can tell you that you're outside the norm, and they can also give you a sense of how far outside the norm you are. And I think that's basically how you can classify it. But it's an interesting question that you asked about how this plays into an incident, because I think you start to cross the boundary between impact detection and alerting, which is interesting.
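The three-state idea ("healthy", "on its way to not healthy", "unhealthy") can be sketched as a bucketing of an anomaly score such as a z-score. The thresholds of 2 and 4 standard deviations below are assumptions for illustration, and `Health` and `classify` are hypothetical names; nothing in the talk specifies the cut-offs.

```python
from enum import Enum


class Health(Enum):
    HEALTHY = "healthy"
    AT_RISK = "on its way to not healthy"
    UNHEALTHY = "unhealthy"


def classify(z: float, warn: float = 2.0, fail: float = 4.0) -> Health:
    """Bucket an anomaly score by how far outside the norm it is.

    Only deviations in the 'bad' direction (positive z, e.g. more errors
    or higher latency) count; the thresholds are illustrative guesses.
    """
    if z >= fail:
        return Health.UNHEALTHY
    if z >= warn:
        return Health.AT_RISK
    return Health.HEALTHY


for score in (0.5, 2.5, 6.0, -5.0):
    print(score, classify(score).value)
```

For a metric where lower is worse (say, a delivery percentage), you would negate the score before classifying.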

I think most SREs that I have either worked with or managed are very much focused on the alerting side of it, and on incidents where we're like, "Okay, we are hard down," or "The utility that we were providing for this function is now zero, and that's unacceptable," or it's a shadow of what we normally provide. That's different from the slight negative impact that maybe a developer's change made.

I do think there's something there. I think it's an interesting area, and honestly it'll be interesting to see how we evolve, because we're trying to empower developers to be DevOps people. And then there are certainly people acting as your DevOps or SRE frontline engineers who are dealing with incidents today. There's some overlap between those. I see a future where, again, we're tracking deploys and saying, "Here are all the important things that happened from this deploy." Well, if the deploy caused an incident, that's certainly something you want to be able to capture and understand, so you can go back and ask, "Why?", or even just say, "Quickly, let's roll this thing back."

Yoz Grahame:

Actually, there's an interesting question here from Johnny Five Is Alive, saying [inaudible 00:43:57]. We were talking about sci-fi earlier and its impact on the technology industry, with your 2001 poster in the background there. And for Short Circuit fans: they say, "An impact assessment in a waterfall environment traditionally takes a lot of time. What should be the timeframe for an impact study in an agile environment or using a DevOps framework?" This actually ties into something I was wondering about as well. What I'm guessing, for Sleuth and for SRE impact, is that you're talking about minutes: events that cause change over a few minutes. Can you use the same techniques for things where the impact is measured across weeks or months?

Dylan Etkin:

It's a good question. I think the difficulty is dragging the signal out of the noise. If you're working in a DevOps environment and doing continuous deployment, you have so many frequent changes that there's a lot of noise, and we need to draw some signal out of that. But because we're doing discrete changes, we can try to tie the information in that shorter time span to the impact we're making. When you have an impact that plays out over a very long timeframe, especially in an environment where you're moving that quickly, I think it's really difficult to correlate any one change, the cause, with the overall impact.

I don't know how solvable that problem is, but what I see happening in our industry is that DevOps is becoming super mainstream. I've been watching this over the last 10 years, and for the longest time it was a bleeding-edge thing. Now you see that all teams, even large enterprises, know that they need to move to this way of working, and teams are trying to adopt continuous delivery. And I think as soon as you get into the range where you're deploying at least, let's say, once a week, that's when this sort of technique can start to become really powerful and you can empower individuals.

Yoz Grahame:

Yeah.

Dylan Etkin:

[inaudible 00:46:25] Johnny Five's question, though. Can you repeat the question? I thought [inaudible 00:46:28]

Yoz Grahame:

Sure. The question was: an impact assessment in a waterfall environment traditionally takes a lot of time; what should be the timeframe for an impact study in an agile environment or using a DevOps framework?

Dylan Etkin:

Right. And related to the timing, I do think it should be minutes, or at most an hour or something along those lines. Basically, it's like tests; it's a lot like CI. You're a developer and you're trying to build stuff. We have tests that gate your ability to move things into a production environment. That gives you immediate feedback. If you've made a change, or a huge refactoring, and you've broken all the tests, you don't move on to the next thing, start working on something else, and lose all the context about what you were doing. You turn around and fix that test, and you realize, "Oh, I'm actually not done with this work, because I need to pass this gate." Similarly, when you get things into production: in a perfect world our tests would catch everything, but that's not a world that I've ever lived in. And that's the name of the podcast, right?

Yoz Grahame:

Right, exactly.

Dylan Etkin:

[inaudible 00:47:38] We need more safety nets around that, and they have to be quick. Otherwise, developers are going to move on to the next thing. We need to make it part of the definition of done. It needs to be within minutes, or at most an hour or so.

Yoz Grahame:

Right. And I love that with this, you're able to tie back ... One of the things that really helps with the DevOps movement is if you are able to tie back to the causes and see who owns those changes. Then you can alert those people, give the information that is most useful to them.

Dylan Etkin:

[inaudible 00:48:12] software development. But at the end of the day, the team is made up of individuals doing things. And that's how we get changes in the systems even at large companies where we've got 10,000 developers. Individuals are making these changes.

Yoz Grahame:

Thank you for bringing up testing in production, which is obviously something that we talk a lot about and enthuse about. Especially being able to do things like a percentage rollout of a new feature and watch the impact of that. If you're doing a canary rollout, a 5% or 1% rollout or even smaller depending on your normal traffic size, how would you do this kind of impact detection with that?

Dylan Etkin:

Yeah, that's an exciting area, and honestly one that I can't wait for us to move into. When you have a more complicated environment setup, there are so many interesting things you can do around tracking a change through the different deployment environments and the impact it has had in each of them. You can start to get a profile of ... You can almost think of it as a unit: you've got this deployable unit that's making its way through your gauntlet of different deployment environments, and you can get more and more information and build more and more confidence. That's the whole idea: you're trying to gain some sense of confidence that this change is going to do the right thing as it makes its way to your users. I think impact applies exactly the same way in each of those. You start to understand, "Okay, in our canary environment at 5%, impact was normal," or whatever. Then you can convince yourself, "That's because 5% of our users did run that code path, so we're feeling good about those numbers," or you're not.
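For the canary case, the simplest comparison is the per-request error rate in the canary cohort against the rest of the traffic, scaled up to what a full rollout would mean. This is a naive point estimate under the assumption that the canary users are representative; at 1% or 5% a real analysis would also want a significance test before trusting the number. `canary_error_delta` and the sample figures are hypothetical.

```python
def canary_error_delta(canary_errors: int, canary_requests: int,
                       control_errors: int, control_requests: int,
                       full_traffic_requests: int) -> tuple[float, float]:
    """Return (per-request error-rate delta, extra errors expected if the
    change went to 100% of traffic).

    Naive point estimate: assumes the canary cohort is representative of
    all users and ignores sampling noise, which matters a lot at 1%.
    """
    canary_rate = canary_errors / canary_requests
    control_rate = control_errors / control_requests
    delta = canary_rate - control_rate
    return delta, delta * full_traffic_requests


# 5% canary: 5,000 of 100,000 requests went through the new code path.
delta, extra = canary_error_delta(25, 5_000, 95, 95_000, 100_000)
print(f"rate delta {delta:.4f}, about {extra:.0f} extra errors at 100%")
```

Here the canary's rate (0.5%) is five times the control's (0.1%), which extrapolates to roughly 400 extra errors per 100,000 requests if rolled out fully.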

Yoz Grahame:

So being able to extrapolate from the sample to the full 100%.

Dylan Etkin:

Yeah. And honestly, not necessarily related to impact, I also think understanding how changes flow through your different environments is something a lot of teams don't have insight into today. Understanding, "How long has a change lived inside our staging environment? How long did it spend making its way to production? Has it taken three weeks to get there or three hours? And how fearful should I be based on the amount of involvement? How many times did this thing go into one environment and back into another, back and forth, and go through revisions, or did it flow straight through?" I think that's information you could use to determine how risky a change might be, or how much impact it might have on your production system at the end of the day.
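The questions Dylan lists (time in staging, total time to production, how much a change bounced between environments) can be answered from a simple ordered log of when a change entered each environment. The event format below is an assumption for illustration; real deploy tooling records this differently, and `environment_history` is a hypothetical name.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def environment_history(events: list[tuple[datetime, str]]
                        ) -> tuple[dict[str, timedelta], int, timedelta]:
    """Summarize how one change moved through deployment environments.

    events: (time the change entered an environment, environment name),
    sorted by time and ending with its arrival in production.
    Returns time spent in each environment before production, the number
    of environment transitions, and total lead time.
    """
    dwell: dict[str, timedelta] = defaultdict(timedelta)
    for (entered, env), (left, _) in zip(events, events[1:]):
        dwell[env] += left - entered
    transitions = max(len(events) - 1, 0)
    lead_time = events[-1][0] - events[0][0] if events else timedelta(0)
    return dict(dwell), transitions, lead_time


t = datetime(2020, 4, 1, 9, 0)
events = [
    (t, "dev"),
    (t + timedelta(hours=2), "staging"),
    (t + timedelta(hours=5), "dev"),       # bounced back for a revision
    (t + timedelta(hours=6), "staging"),
    (t + timedelta(hours=30), "production"),
]
dwell, transitions, lead = environment_history(events)
print(dwell, transitions, lead)
```

A change with many transitions, or one that lingered in staging, could then be weighted as riskier, which is the kind of signal Dylan suggests.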

Yoz Grahame:

Yeah. That ties back to many things, as you were saying, about trying to extrapolate total impact [inaudible 00:51:18]. And one thing I was also wondering ... Well, first, the point you were making about tracking how long things take to go through staging: I think it also ties into Johnny Five Is Alive's question about the timeframe for progress through an agile framework. It really matters there. Are you able to use user behavior for SLIs? Most of what we've been talking about is things like page load time and memory usage. But say I want to know how often users are clicking on this thing. We're starting to head into UX, and possibly product and marketing, stats. Is there value in using those for SLIs and SLOs, or is it just too much noise?

Dylan Etkin:

No, definitely. I don't think I mentioned this on my slides, but I had it as a note in my bullets. I think there's a world in the future where a developer can specify, maybe in a pull request description, "This is something that is part of this feature that I want to monitor, and it's important to the feature." Maybe it's click rate, or time spent hovering over an element, or whatever it is that they're instrumenting in some observability platform. And saying, "That's what I want you to report back to me on." When this change has gone out, some automated system goes off and says, "Cool, I saw the spec you wrote up. I'm going to go drag that impact value out of your systems. And when I turn around and talk to you about your deploy after five or 10 minutes, I'm going to tell you the health of your change overall, and specifically related to that thing."
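As a sketch of the future Dylan describes, the pull request description could carry a small, machine-readable annotation naming the metrics to report on. The `monitor: <metric>` line convention and the metric names below are entirely hypothetical; no tool mentioned in the talk defines this format. The snippet only shows the parsing step, using Python's `re` module.

```python
import re

# Hypothetical convention: one "monitor: <metric>" line per metric the
# author wants reported back after the deploy. Case-insensitive.
MONITOR_LINE = re.compile(r"^[ \t]*monitor:[ \t]*(\S+)[ \t]*$",
                          re.IGNORECASE | re.MULTILINE)


def metrics_to_watch(pr_description: str) -> list[str]:
    """Return metric names declared in a PR description, in order."""
    return MONITOR_LINE.findall(pr_description)


body = """Adds the new checkout button.

monitor: checkout.button_click_rate
Monitor: checkout.hover_ms
"""
print(metrics_to_watch(body))
```

A post-deploy job would then look up each named metric in the team's observability platform and include it in the five- or ten-minute health report.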

I think that has to happen, because it's not always the case that you're making a change that is going to affect the high-level indicators for the overall service. You might have something very specific to you. And I think that's huge [crosstalk 00:53:38] we know we've done the right thing.

Yoz Grahame:

Right, exactly. That's what it all comes down to, right? "Did I make things better or worse? Should I roll this back?"

Dylan Etkin:

And a huge part of that, honestly, is communication. I always make the analogy to something like JIRA or an issue tracker. The reason we use these things is that we want to talk about the thing we're about to do. We want to be able to comment on it and understand the progress of that thing: "Are we doing it well, are we doing it poorly? Maybe I want to talk to a product manager about it, and I want a platform to capture all the information around that."

It's the same with deploys and the impact we're having on our services. We need a mechanism for having that conversation. We need to collect the important information that goes into making that thing up, and then we need to be able to have a conversation about it, so we can say, "These changes made things worse for this reason, and this is the thing we need to be looking out for." Or, "We've deployed this thing, we've changed this feature flag, and that's part of this Epic," so that a product manager can follow along and see how far along we are in the Epic. We're continuously deploying, but we don't yet have the tools to help us have that cultural, sharing conversation that we have with some of the other tools out there.

Yoz Grahame:

And that is so valuable. What you're talking about doing is bringing new nouns: new ways to be specific and communicate quickly and easily about impact. Our industry, I think, rightly gets criticized for having too much jargon and too many acronyms. But being able to be specific and unambiguous about what a problem is, what a solution is, and why changes have happened matters. This kind of impact measurement gives everybody, not just developers, mental tools for explaining, discussing, and theorizing about how to make things better or what is making things worse.

Dylan Etkin:

And what went into the change. I think once you can be articulate about anything, you can share it, and then you can [inaudible 00:56:11] about it. And you can start to ... I always think of the folks in marketing. They're doing a lot of the hard work for developers, and developers tend to be, no offense, I'm a developer too, somewhat shit at communication. Extracting information out of us can be pretty painful.

Yoz Grahame:

Oh God, yes.

Dylan Etkin:

I think developers would really like to be acknowledged for the work they're pushing out and the things they're accomplishing, and marketers would probably love to be able to acknowledge that. But there's this disconnect around deploys and the things being pushed out. Again, a communication tool could be a great way to bridge that gap, where it's like, "Look, my work is dependent on your work, and I don't want to keep bugging you, because you get really mad at me when I bug you. So maybe there's a way I can know when you've actually made progress on this thing so that I can start my job," and not get yelled at.

Yoz Grahame:

Yeah. As you were saying, things like JIRA or other issue and task trackers tie all of that together while you're still creating [inaudible 00:57:25]. And then you have ways to talk about the effects once something is delivered. I can see that being so valuable.

This is also something I was talking about the other day with Kim, our producer, who works in marketing. One of the things that fascinates me is that in engineering we tend to be seen as the technical, scientist types. But there's so much more science, proper science, in marketing than there is in engineering, in terms of marketing [crosstalk 00:58:04]

Dylan Etkin:

You're not supposed to say that. You're letting the cat out of the bag. We're all about the science, Kim.

Yoz Grahame:

Honestly, we really do know whether tabs or spaces is better.

Dylan Etkin:

Absolutely. No, there's a definitive answer. We're a hundred percent sure of that. Don't worry. It's fine.

Yoz Grahame:

That was Kim's gag. It's like, "You can't even decide whether tabs or spaces are better. Don't talk to me about science." Yeah, busted. Completely true. We should wrap it up there. This video is going to be on YouTube and on our blog in the next couple of weeks. Thank you so much, Dylan, for joining us and giving us a fascinating talk. [crosstalk 00:58:44] Thank you. And this is sleuth.io, which is making these kinds of tools available, directly linking cause to effect and showing what caused impact changes. This is fabulous.

Thank you, everybody who has joined us today. We will be back next week with more Test in Production. And do we know who our speaker is next week? [inaudible 00:59:15] Hopefully next week. That is still [inaudible 00:59:19] the possible changes. But it will doubtless be fascinating. Thank you so much, Dylan. I'm looking forward to having you back sometime to talk about Sleuth again and where you're at, because it's a really cool product and technology.

Dylan Etkin:

Thank you.

Yoz Grahame:

Thank you very much and everybody see you next week. Cheers.
