Editor's Note: This post was recently updated from a previous version.

A Brief History of Running Apps

There was a time, not too long ago, when delivering apps depended on significant manual work. The step-by-step flow of taking the compiled application code, delivering it to a server, and configuring all of the adjacent components and technologies (CPU, memory, storage, networking, etc.) was a careful process with a lot of room for error. Beyond the infrastructure configuration, scaling was manual as well. Duplicating these processes across multiple servers created significant operational burdens for infrastructure teams.

Automation tools and configuration management platforms helped with aspects of this, but they still left many gaps in replicating infrastructure configuration. As the technology industry matured, containerization tools like Docker emerged as a way to run apps quickly while simplifying the management of many infrastructure components.

Organizations that leaned on this ecosystem were able to quickly accelerate the way they delivered applications, but ultimately were still faced with manual tasks around automation of the container service itself. To answer this challenge, container orchestration platforms (and in some cases PaaS, or platform-as-a-service, which we’ll get into below) arose to provide solutions not just for running containers, but KEEPING them running, while answering problems like automation and scalability in a first-class way.

What is container orchestration?

As I alluded to above, container orchestration is the practice of automating the various concerns around container “running.” The most common container orchestration platform in the cloud-native world is Kubernetes, but platforms like Apache Mesos and even docker-compose also represent solutions in this space. Public cloud platform services like Microsoft Azure Container Service and Amazon’s Elastic Container Service also provide a way to run containers in an orchestrated fashion.

We often focus on the simple “run the app” experience, but the area where container orchestration really shines is in the automation of dependencies that exist alongside the running state. These dependencies often include things like:

- Networking/DNS configurations

- Resource allocation (storage, CPU, memory)

- Replica management (multiple running workloads to protect against single points of failure)

In environments where containers (or even more cloud-native services) aren’t adopted, these tasks are all typical multi-touch point interactions across multiple teams. Orchestration platforms free up engineers and infrastructure operators from these repetitive and predictable tasks. Beyond these “dependency” examples, there’s the core app engineering business of (re)creating, scaling, and upgrading the running applications (or their containers).

The ease of use of container operations has led to their adoption becoming a default path for many organizations. From an operations standpoint, it’s very simple to create and run container workloads and when you introduce the concept of orchestrating those workloads, the management time for the individual services is significantly reduced.

The practice of container orchestration becomes even more valuable as teams adopt a microservices architecture for building their application platforms. Even small applications can consist of dozens of containers. Container orchestration platforms provide you with a mechanism for managing the lifecycle of containers and ensuring the repetitive tasks are managed intelligently by the system, oftentimes in a scalable and declarative way.

Key Takeaways

- Automation gave us a standardized way to compile and deploy application code, and now the focus has grown to include keeping applications running and simplifying iteration.

- Container orchestration has had its own evolution across a number of different platforms (and vendors), with Kubernetes being the most widely adopted at this point.

- Container orchestration platforms focus on keeping workloads running long term.

- Many also simplify the interaction with dependencies by integrating networking/DNS, resource management, replica management, and even rollout processes for new workloads.

Container orchestration platforms

Container orchestration is just a concept. To actually implement it, as mentioned above, you need a container orchestration platform. These are the tools that you can use for container management and for reducing your operational workload.

Imagine that you have 20 containers and you need to gradually upgrade all of them. Doing this manually, while possible, would take you quite some time. Instead, you can instruct container orchestration tools via a simple YAML configuration file to do it for you (this is the declarative approach). That’s just one example. Container orchestration platforms focus on everything that’s needed to keep your containerized application up and running.
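
The gradual upgrade described above can be sketched in a few lines of Python. This is a toy simulation rather than how any particular platform implements it: the `rolling_update` function, the `max_unavailable` parameter name, and the `shop:1.0` and `shop:1.1` image tags are all assumptions made for illustration.

```python
from typing import List


def rolling_update(containers: List[str], new_image: str, max_unavailable: int = 5) -> List[List[str]]:
    """Replace images batch by batch and return a snapshot of the fleet after each batch."""
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    snapshots = []
    for start in range(0, len(containers), max_unavailable):
        end = min(start + max_unavailable, len(containers))
        for i in range(start, end):
            # Only this batch is touched; the rest keep serving the old version.
            containers[i] = new_image
        snapshots.append(list(containers))
    return snapshots


fleet = ["shop:1.0"] * 20
history = rolling_update(fleet, "shop:1.1", max_unavailable=5)
for step, state in enumerate(history, start=1):
    print(f"batch {step}: {state.count('shop:1.1')} of {len(state)} upgraded")
```

With 20 containers and batches of five, the upgrade finishes in four steps, and at no point are more than five containers being replaced at once. A real platform would also wait for each batch to become healthy before moving on.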

Container orchestration platforms will restart crashed containers automatically, scale them based on the load, and make sure to deploy all containers evenly on all available nodes. If one of the containers starts misbehaving, for example, eating all the RAM available on the node, the container orchestration platform will make sure to move all the other containers to other nodes in the cluster to protect from greater instability.
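
To make the “spread evenly” and “move to other nodes” ideas more concrete, here is a minimal sketch. It assumes a simple least-loaded placement rule, which is only one of many possible strategies and not the scheduler of any specific platform. The function names and the `node-a`/`web-0` style names are hypothetical.

```python
from typing import Dict, List


def spread(containers: List[str], nodes: List[str]) -> Dict[str, str]:
    """Assign each container to the node that currently runs the fewest containers."""
    load = {node: 0 for node in nodes}
    placement = {}
    for name in containers:
        target = min(nodes, key=lambda n: load[n])  # ties go to the earliest node
        placement[name] = target
        load[target] += 1
    return placement


def reschedule(placement: Dict[str, str], failed_node: str, nodes: List[str]) -> Dict[str, str]:
    """Move containers off a failed node onto the least-loaded healthy nodes."""
    healthy = [n for n in nodes if n != failed_node]
    if not healthy:
        raise RuntimeError("no healthy nodes left")
    # Containers on healthy nodes stay where they are.
    updated = {c: n for c, n in placement.items() if n != failed_node}
    load = {n: 0 for n in healthy}
    for node in updated.values():
        load[node] += 1
    for container, node in placement.items():
        if node == failed_node:
            target = min(healthy, key=lambda n: load[n])
            updated[container] = target
            load[target] += 1
    return updated


nodes = ["node-a", "node-b", "node-c"]
placement = spread([f"web-{i}" for i in range(6)], nodes)
print(placement)
print(reschedule(placement, "node-b", nodes))
```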

Do you need to implement service discovery or make sure that specific storage volume is accessible to specific containers even if they move to another node? The container orchestration platform can do it for you, in some cases. Do you need to prevent some containers from talking to the internet while only allowing others to talk to specific endpoints? You’re covered here as well.

Managed vs. self-built (homegrown)

You can create a container orchestration platform from scratch, all on your own or even leveraging open source platforms. In most of these cases, you’ll need to install and configure the platform yourself. This, of course, gives you full control over the platform and allows you to customize it to your needs, but also carries with it the burden of care and feeding the platform.

On the other hand, a managed container orchestration platform is one where the provider (most commonly a cloud provider) takes care of installation and operations while you only consume its capabilities. In most cases, you don’t need to know how these platforms work under the hood, and you only want them to manage your containers. In such cases, managed offerings come in handy and save you a lot of time. Some examples of managed container orchestration platforms include Azure AKS, Google GKE, Amazon EKS, Red Hat OpenShift, Platform9, and IBM Cloud Kubernetes Service.

Depending on the choice of the platform, the list of the features will differ. However, on top of the platform itself, you’ll also need to think about a few additional components to create a full infrastructure solution. A container orchestration platform, for example, isn’t responsible for storing your container images, so you’ll need an image registry for that.

Depending on your underlying infrastructure and whether you’re running in the cloud or in an on-premises data center, you may need to implement load balancer technologies or other high availability resources separately. In some cases, these may also be managed by the platform itself. For example, most of the managed container orchestration platforms will automatically manage cloud load balancers or other downstream cloud services (e.g., storage platforms, DNS, etc.) for you.

Container orchestration and microservices

Now that you know how container orchestration platforms work, let’s take a step back and talk about microservices. It’s important to understand the concept because container orchestration platforms are most effective with applications that follow basic microservice principles. This doesn’t mean that you can only use a container orchestration platform with the “most modernized” microservice applications. The platform will still manage other workloads correctly; you will just see the biggest “uplift” when your architecture is optimized as well.

What are microservices?

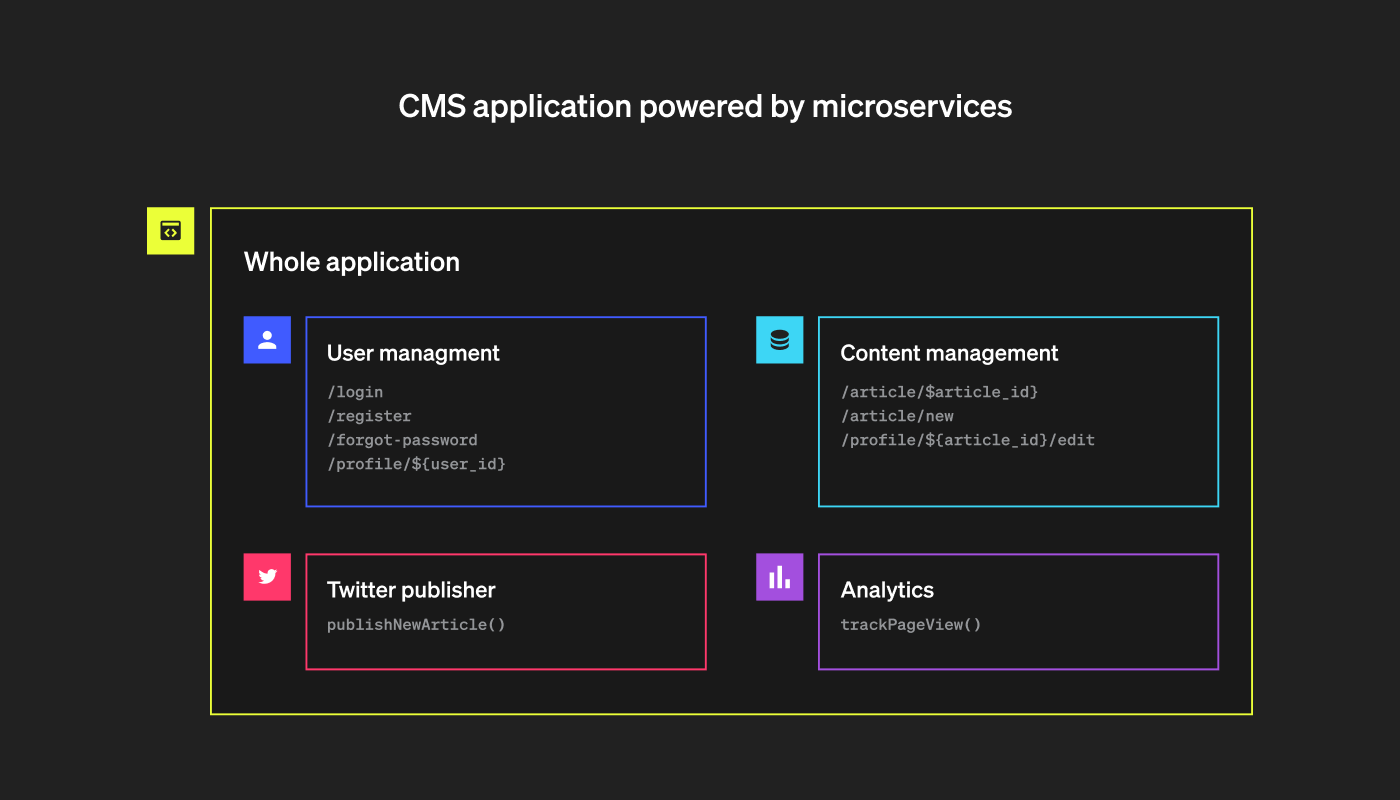

Let’s start with what microservices aren’t. Traditional, old-fashioned software is built as one piece (i.e. the monolith model). If you, for example, need to make a very simple change to, say, the color of one of the buttons, in one component of your application, you would have to redeploy the whole application with that change added in. In a microservices architecture you split this monolith into smaller, more manageable pieces.

With microservices, whenever you need to make any change in the application, you only need to test and redeploy one of these small pieces. This gives you the ability to make changes quicker and easier. That’s just one of the many advantages of microservices—the idea of breaking the system apart into smaller and more manageable chunks.

Caption: Example of a content management system (CMS) application running on a microservices architecture. "User management" is one microservice, "Twitter publisher" is another microservice, and so on.

Advantages of microservices

Splitting your application into many small individual pieces brings many more advantages than just the example above. One application doesn’t need to be written in one singular language anymore. The microservice components can be built in each developer’s favorite language. Teams can also implement features and bug fixes faster since they don’t need to wait for others. You can easily test new features ad hoc by replacing just one microservice. Scaling is way easier and more effective since you can scale only the individual pieces of your application that need scaling. Loads on your application can be distributed more evenly by properly placing microservices.

But with all these benefits comes complexity too. In order to make these individual pieces work as one application, they need to talk to each other. This is usually done via API configurations between the tiers. So, instead of focusing just on the code, you need to start taking care of networking and communication between microservices. You also need to build each microservice separately, and if you choose to have different languages and frameworks, the building process won’t be the same for all of them. You need to spend extra effort in creating good integration tests. But you can focus on all the benefits of microservices and offload most of these extra tasks by using a container orchestration platform.

Docker containers

Packaging your microservices into Docker containers is a popular way to containerize your application. Microservices is just a concept and relates to the way you write code for your application. Creating your application in small pieces and connecting them to each other via various API methods (most commonly REST) is what we call microservices architecture.

To run a microservice, you still need everything that an application normally needs: the kernel, some system libraries, a runtime, and perhaps a server application to run it. Traditionally, you would need to install all of these dependencies on your server. Docker containers let you package everything except the kernel into a container image. That exception is why containers are not like virtual machines (VMs): all containers on a host share the same underlying host OS kernel.

Unlike VMs, containers are very small, giving you portability since many of the underlying system level processes are made available by the host itself. Starting them or restarting them takes seconds.

Note that Docker isn’t the only tool that allows you to run containers. In fact, many container orchestration platforms have moved from Docker to alternative runtimes like containerd or CRI-O, and tools like Podman offer a Docker-like workflow. They all, however, generally work in much the same way.

Docker Swarm

Now that you know the basics, let’s discover some of the most popular container orchestration platforms. Docker's own Swarm mode is built directly into the Docker engine itself. It allows you to enable basic container orchestration on a single machine as well as connect more machines to create a Docker Swarm cluster. Since it’s built into Docker, it’s very easy to start with. You only need to initialize the Swarm mode and then optionally add more nodes to it.

It works similarly to Kubernetes (more on that below), following the manager/workers model. All the management and decision making is done by one or more swarm managers, and containers run on the nodes that have joined the cluster. The main benefit of using Swarm mode vs. plain Docker is high availability and load balancing. You no longer have one node where all your Docker containers run. Instead, you have multiple nodes and a swarm manager that ensures all the containers are spread evenly among them.

Docker Swarm is a good, easy option when you are just starting with Docker. You can keep using your existing Compose files to deploy to your Swarm. But it offers much less than Kubernetes, and there aren’t many managed Swarm offerings. These days, container orchestration has largely moved on from Swarm in many (but not all!) cases.

Kubernetes

In recent years, Kubernetes has grown to become the dominant orchestration platform. Google released Kubernetes in 2014, and its popularity has surged in the last few years. It’s an open source platform for deploying and managing containers. Its main job is to maintain the desired state, which you define via YAML configuration files. It not only keeps your containers up and running but also provides advanced networking and storage features. Kubernetes will also monitor the health of the cluster. It’s a complete platform for running modern applications, and many advanced teams have begun looking at Kubernetes as a platform for building platforms.
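
The “desired state” idea is easier to see in code. The sketch below is a deliberately simplified reconciliation step written for this post; it is not Kubernetes source code, and the `reconcile` and `apply` helpers, along with the service names, are assumptions. It compares the replica counts you asked for with what is actually running and works out the actions needed to close the gap.

```python
from typing import Dict, List, Tuple

Action = Tuple[str, str, int]  # (verb, service name, number of replicas)


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[Action]:
    """Compute the start/stop actions that bring the observed state to the desired state."""
    actions = []
    for name in sorted(set(desired) | set(observed)):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if have < want:
            actions.append(("start", name, want - have))
        elif have > want:
            actions.append(("stop", name, have - want))
    return actions


def apply(observed: Dict[str, int], actions: List[Action]) -> Dict[str, int]:
    """Return the observed state after the actions have been carried out."""
    updated = dict(observed)
    for verb, name, count in actions:
        delta = count if verb == "start" else -count
        updated[name] = updated.get(name, 0) + delta
        if updated[name] == 0:
            del updated[name]  # nothing left running for this service
    return updated


desired = {"web": 3, "worker": 2}
observed = {"web": 1, "worker": 2, "legacy": 1}

actions = reconcile(desired, observed)
print(actions)
observed = apply(observed, actions)
print(reconcile(desired, observed))  # an empty list means nothing is left to do
```

An orchestrator runs a loop like this continuously, so a crashed container simply shows up as a new gap between desired and observed state on the next pass.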

How it works

Kubernetes follows the controller/worker model. Its core components are deployed on controller nodes, which are only responsible for managing the system, while the actual containers run on worker nodes. Controller nodes run Kubernetes components like the API server, which is the “brain” of everything, and the scheduler, which is responsible for scheduling containers. You can also find an etcd server on controller nodes, and that's where Kubernetes stores all its data. Worker nodes run small components called kubelet and kube-proxy, which are responsible for receiving and executing orders from the controller as well as managing containers.

Extending Kubernetes

Kubernetes comes with many built-in object types that you can use to control the behavior of the platform. But you can also extend it by using Custom Resource Definitions (CRDs). This makes Kubernetes very flexible and allows you to customize it to your needs, expanding the core integrations of the platform to reach other systems such as storage and networking components (as well as MANY others).
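
As a rough illustration of what a CRD looks like, the sketch below builds a manifest as a Python dictionary and prints it as JSON (manifests are usually written in YAML, but JSON is accepted as well). The `example.com` group, the `Backup` kind, and the `schedule` and `retentionDays` fields are hypothetical names chosen for this example, and `build_crd` is just a helper written for this post.

```python
import json
from typing import Any, Dict


def build_crd(group: str, kind: str, plural: str, version: str = "v1") -> Dict[str, Any]:
    """Return a CustomResourceDefinition manifest as a plain dictionary."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD name has to take the form <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"kind": kind, "plural": plural, "singular": kind.lower()},
            "versions": [
                {
                    "name": version,
                    "served": True,
                    "storage": True,
                    # Hypothetical schema for the custom object's spec.
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    "properties": {
                                        "schedule": {"type": "string"},
                                        "retentionDays": {"type": "integer"},
                                    },
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


crd = build_crd(group="example.com", kind="Backup", plural="backups")
print(json.dumps(crd, indent=2))
```

Once a definition like this is registered, the cluster would accept `Backup` objects alongside its built-in types. Acting on them is left to a controller that you supply.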

On top of that, Kubernetes also allows you to build operators, which give you an almost unlimited ability to implement your own logic in Kubernetes. Operators and CRDs are part of the reason for the huge popularity of Kubernetes. The ability to extend Kubernetes and implement your own logic according to your business needs is very powerful. It’s worth mentioning here that both CRDs and operators can be used even on managed Kubernetes clusters.

OpenShift

Created by Red Hat, OpenShift is another container orchestration platform, built on top of Kubernetes. Its main focus is to provide a Kubernetes-like platform for running your containers on-premises, in a private cloud, or in hybrid cloud environments.

There are, however, many differences too, the main one being the concept of build-related artifacts. OpenShift implements such artifacts as first-class Kubernetes resources. Another difference is that Kubernetes doesn’t really care about the underlying operating system, while OpenShift is tightly coupled to Red Hat Enterprise Linux. On top of that, OpenShift comes with many components that are optional extras in vanilla Kubernetes, such as Prometheus for monitoring or Istio for service mesh capabilities.

Overall, while Kubernetes leaves all the control and choices to the user, OpenShift tries to be a more complete package for running applications within enterprises.

Managed Kubernetes services

Earlier, we talked about managed Kubernetes. Here are a few examples of Kubernetes-as-a-service: Microsoft’s Azure Kubernetes Service (AKS), Amazon Web Services’ (AWS’s) Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and IBM Cloud Kubernetes Service are all well-known Kubernetes offerings from public cloud providers.

They all work the same as a normal Kubernetes cluster. However, you don’t have access to controller nodes, as the cloud provider manages them. This can be good or bad, depending on what your needs are. On one hand, this relieves you of the installation and operation of Kubernetes itself, so you can focus more on your containers. On the other hand, if your company requires some very customized Kubernetes options, you’ll be limited. Without access to controller nodes, you won’t be able to change all Kubernetes options.

Summary

The point of containers is to simplify and speed up the way you develop and ship applications. Instead of worrying about all the libraries and other dependencies, you can just create a container that will run anywhere (relatively) without any major changes or adjustments. We wouldn’t need any extra tools and platforms to help us manage containers if we weren’t moving to microservices at the same time.

Splitting an application into many smaller pieces affords many advantages, but also forces us to take care of a large number of containers. And the larger the number of containers you have, the more time is required to manage them. That’s why container orchestration platforms have become a core component of modern app architectures. By heavily integrating with the underlying infrastructure, they help us not only with containers but with infrastructure management as a whole. This is especially true in cloud environments, where container orchestration platforms can take care of networking, storage, and even provisioning new VMs to the cluster based on fully customizable configurations.

The “orchestration” part of container orchestration platforms is where the real strength of these tools comes into play: helping you keep applications up and running in a stable state, while also giving you extensibility, resilience, and lifecycle control.

***

Development teams use LaunchDarkly feature flags to simplify migration use cases, especially in monolith to microservices scenarios. Feature flags give teams a great deal of control when performing these migrations, both from a feature release standpoint, as well as user targeting. They allow you to gradually move parts of your application from the old system to the new one, rather than make the transition in a large, sweeping fashion. Moreover, LaunchDarkly feature flags provide a mechanism by which you can disable a faulty microservice during the migration in real time, without having to redeploy the application or service—something we commonly refer to as a kill switch.
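
To illustrate the idea of a gradual migration, here is a generic percentage-rollout sketch. It does not use the LaunchDarkly SDK and should not be read as how LaunchDarkly works internally. The hashing scheme, the `bucket` and `route` helpers, and the `migrate-orders` flag key are all assumptions made for this example.

```python
import hashlib


def bucket(flag_key: str, user_key: str) -> float:
    """Map a (flag, user) pair to a stable number in the range [0, 1)."""
    digest = hashlib.sha256(f"{flag_key}:{user_key}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 2**32


def route(flag_key: str, user_key: str, rollout_percent: float) -> str:
    """Send a user to the new microservice once their bucket falls inside the rollout."""
    if bucket(flag_key, user_key) * 100 < rollout_percent:
        return "orders-microservice"
    return "monolith"


users = [f"user-{i}" for i in range(1000)]
for percent in (0, 10, 50, 100):
    moved = sum(route("migrate-orders", u, percent) == "orders-microservice" for u in users)
    print(f"{percent}% rollout: {moved} of {len(users)} users on the new service")
```

Because each user’s bucket is stable, raising the percentage only ever adds users to the new service, and anyone already migrated stays migrated.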

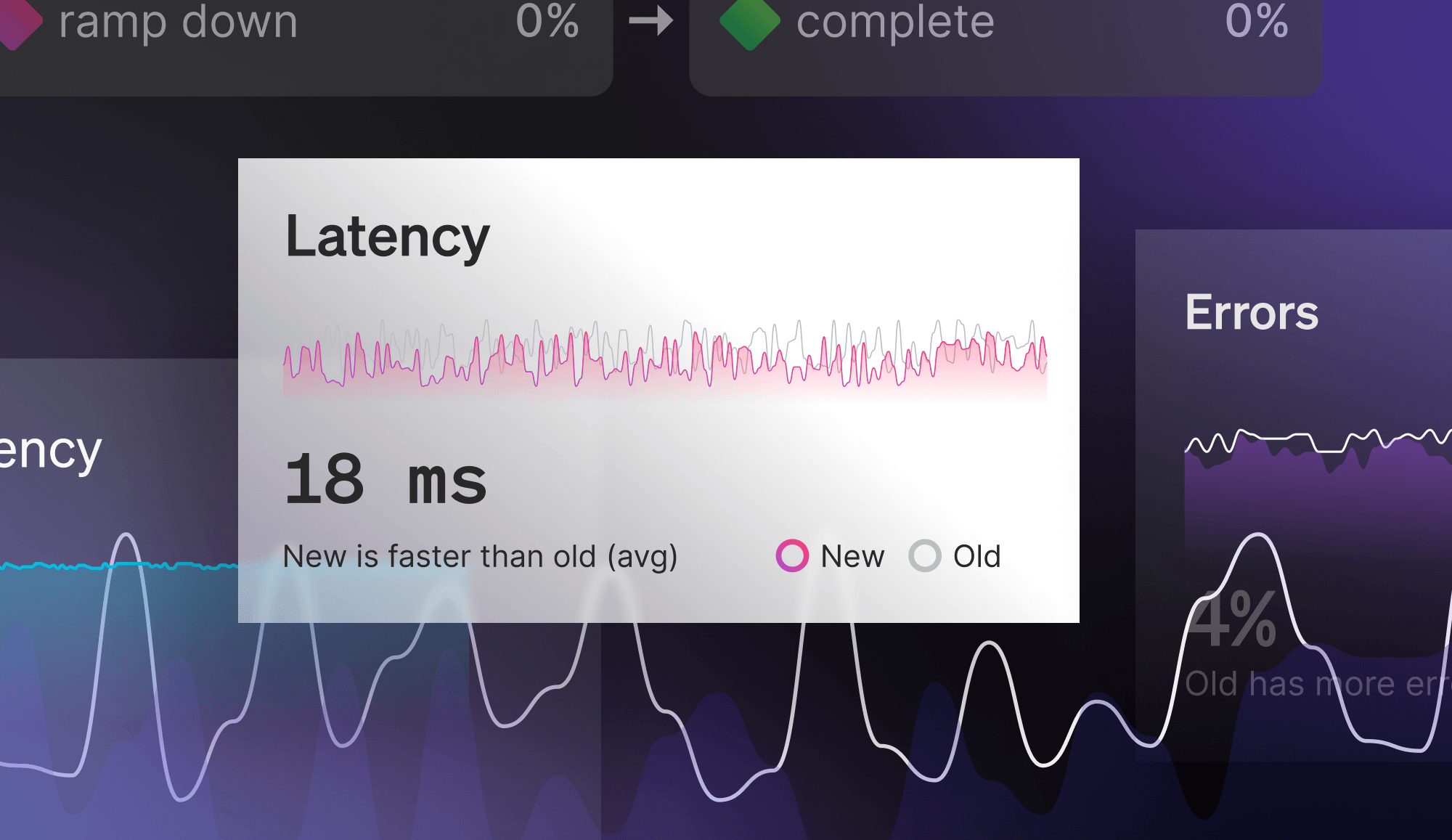

If your team is familiar with the technique of feature flagging, ultimately you can take advantage of this same concept within your microservices workloads. Often, with homegrown or open-source feature flag management systems, it can be challenging to orchestrate feature flags across multiple microservices as you need to build that logic into how you coordinate these changes. For example, you may have a feature spanning multiple microservices. Say you want to disable this feature across all the different services at once. With a homegrown system, it’s not uncommon for developers to have to manually toggle a flag for the same feature across each service. With LaunchDarkly, you can toggle a single flag, which will, in turn, change the behavior of the feature in question across all the different services in under 200 milliseconds.
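
The single-toggle scenario can be sketched with a shared flag store. This is a hypothetical in-memory stand-in written for this post, not the LaunchDarkly SDK or its API. The `FlagStore` and `Service` classes and the `new-checkout` flag key are invented for illustration.

```python
from typing import Dict


class FlagStore:
    """Hypothetical central flag store that every service reads from."""

    def __init__(self) -> None:
        self._flags: Dict[str, bool] = {}

    def set(self, key: str, enabled: bool) -> None:
        self._flags[key] = enabled

    def is_enabled(self, key: str, default: bool = False) -> bool:
        return self._flags.get(key, default)


class Service:
    """A microservice that checks the shared flag on every request."""

    def __init__(self, name: str, flags: FlagStore) -> None:
        self.name = name
        self.flags = flags

    def handle(self) -> str:
        if self.flags.is_enabled("new-checkout"):
            return f"{self.name}: new checkout flow"
        return f"{self.name}: legacy checkout flow"


flags = FlagStore()
services = [Service(name, flags) for name in ("cart", "payments", "email")]

flags.set("new-checkout", True)
print([service.handle() for service in services])

flags.set("new-checkout", False)  # one toggle acts as a kill switch for all services
print([service.handle() for service in services])
```

The point of the design is that no service owns its own copy of the flag, so a single change is seen by all of them on their next check.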

All told, LaunchDarkly’s feature management platform is an ideal solution for giving fine-grained control over all the features in your application within the context of container-based and microservices architectures.
